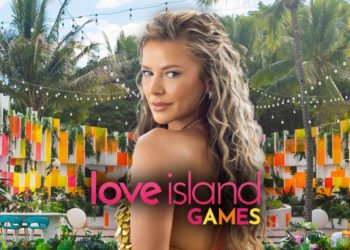

NBCU Heats Up Airwaves Across Broadcast & Streaming With Summer Hits ‘Love Island USA’ & ‘America’s Got Talent’

EXCLUSIVE: NBCUniversal has been bringing the heat across broadcast and streaming this summer thanks to the company’s unscripted heavyweights Love Island USA and America’s Got Talent. Love Island USA’s successful ...