Enoch, one of the newer chatbots powered by artificial intelligence, promises “to ‘mind wipe’ the pro-pharma bias” from its answers. Another, Arya, produces content based on instructions that tell it to be an “unapologetic right-wing nationalist Christian A.I. model.”

Grok, the chatbot-cum-fact-checker embedded in X, claimed in one recent post that it pursued “maximum truth-seeking and helpfulness, without the twisted priorities or hidden agendas plaguing others.”

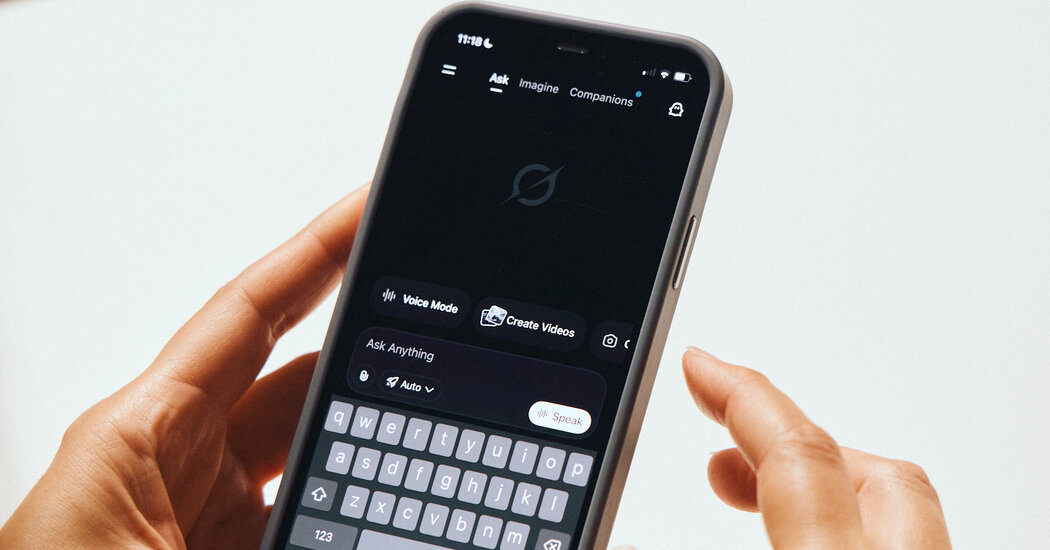

Ever since they burst onto the scene, A.I.-powered chatbots like OpenAI’s ChatGPT, Google’s Gemini and others have been pitched as dispassionate sources, trained on billions of websites, books and articles from across the internet in what is sometimes described as the sum of all human knowledge.

Those chatbots remain the most popular by far, but a number of new ones are popping up to claim that they, in fact, are a better source of facts. They have become a new front in the war over what is true and false, replicating the partisan debate that already shadows much of mainstream and social media.

The New York Times tested several of them and found that they produced starkly different answers, especially on politically charged issues. While they often differed in tone or emphasis, some made contentious claims or flatly hallucinated facts. As the use of chatbots expands, they threaten to make the truth just another matter open for debate online.

“People will choose their flavors the way that we’ve chosen our media sources,” said Oren Etzioni, a professor emeritus at the University of Washington and founder of TrueMedia.org, a nonprofit that fights fake political content. When it comes to chatbots, he added, “I think the only mistake is believing that you’re getting facts.”

The post Right-Wing Chatbots Turbocharge America’s Political and Cultural Wars appeared first on New York Times.