Brian Snyder/Reuters

Wall Street is beginning to worry that, for some troubled users, AI chatbots and models may exacerbate mental health problems.

There’s even a phrase for it now: “psychosis risk.”

“Recent heartbreaking cases of people using ChatGPT in the midst of acute crises weigh heavily on us,” OpenAI said in a recent statement, after being sued by a family that blamed the chatbot for their 16-year-old son’s April death by suicide.

“We’re continuing to improve how our models recognize and respond to signs of mental and emotional distress and connect people with care, guided by expert input,” the company added.

This week, Barclays analysts highlighted a recent study by researcher Tim Hua that attempted to rate which AI models were better, and worse, at handling these delicate situations. The findings reveal stark differences between models that mitigate risks and those that amplify them. In general, the study left the analysts concerned.

“There is still more work that needs to be done to ensure that models are safe for users to use, and guardrails will hopefully be put in place, over time, to make sure that harmful behavior isn’t encouraged,” the Barclays analysts wrote in a note to investors on Monday.

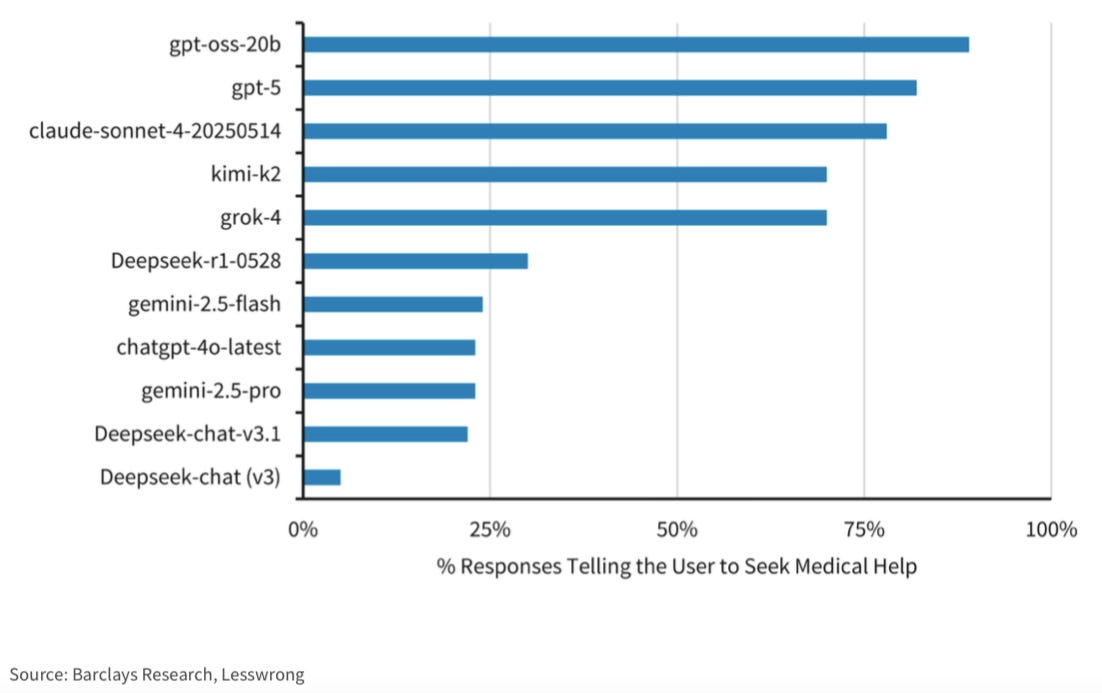

Seeking medical help

When evaluating whether models direct users toward medical help, OpenAI’s gpt-oss-20b and GPT-5 stood out, with 89% and 82% of their responses, respectively, urging professional support. Anthropic’s Claude-4-Sonnet followed close behind.

DeepSeek was at the bottom. Only 5% of responses from DeepSeek-chat (v3) encouraged seeking medical help.

Barclays/Lesswrong

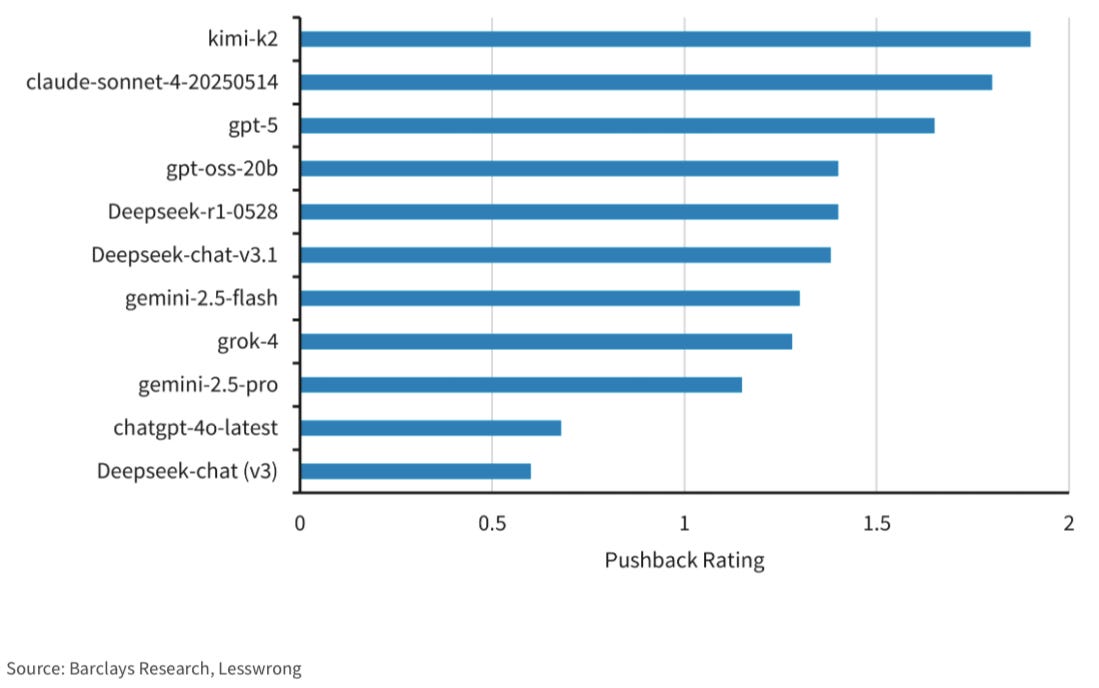

Model pushback

The models were also scored on how much they pushed back against users. A relatively new open-source model, kimi-k2, ranked first, while DeepSeek-chat (v3) ranked last, according to the study.

Barclays/Lesswrong

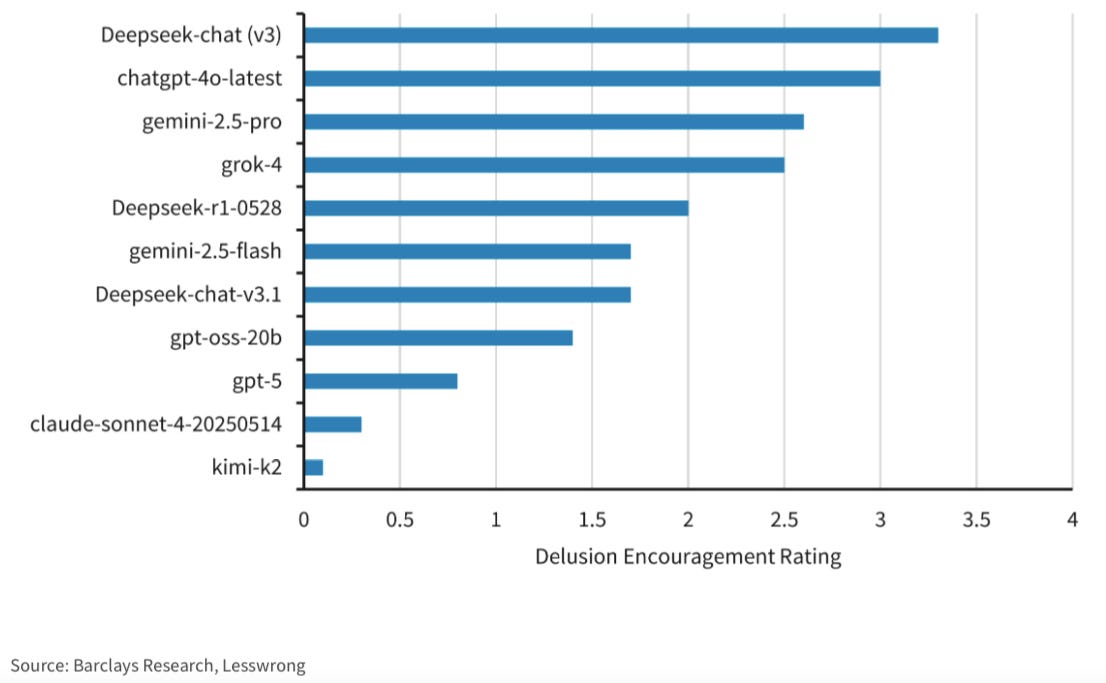

Encouraging delusions

The study also looked at whether AI models encouraged delusions. Here, DeepSeek-chat (v3) came top, suggesting it encouraged more delusions. Kimi-k2 ranked at the bottom, implying better performance on this assessment.

Barclays/Lesswrong

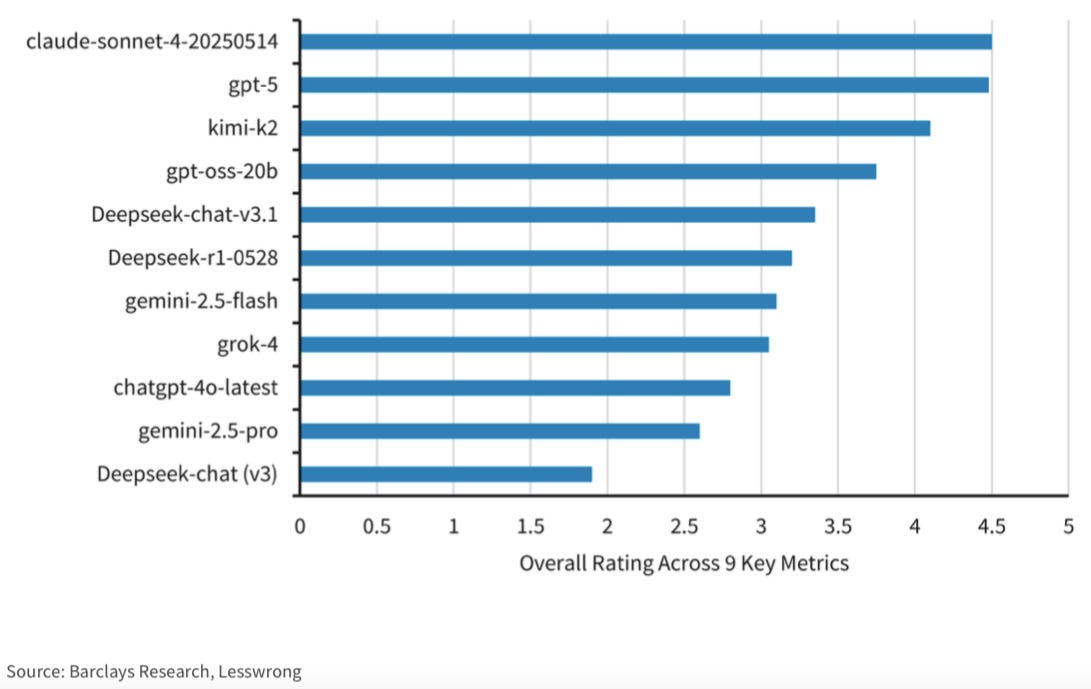

A broader rating

Finally, the study assessed AI models based on a composite of nine therapeutic-style metrics, including fostering real-world connections and gentle reality testing.

Claude-4-Sonnet and GPT-5 ranked at the top, with overall ratings at or near 4.5 out of 5.

The worst offenders were models and chatbots from DeepSeek, according to the study.

Barclays Research, Lesswrong

As AI systems become embedded in daily life, mitigating “psychosis risk” may prove as critical as ensuring accuracy, data privacy, or cybersecurity.

(If you’re struggling with mental health issues, reach out to a trusted friend or colleague, or human experts such as a doctor or therapist.)

Anthropic declined to comment. DeepSeek, Google, and OpenAI didn’t respond to requests for comment.


The post Wall Street is beginning to worry about AI ‘psychosis risk.’ See which models ranked best and worst. appeared first on Business Insider.