
Building artificial intelligence is a bit like constructing an office building.

For months or years, the ground has a big hole, and it looks like nothing’s happening. Then, suddenly, the steel framework goes up, followed by the walls and windows. The bit we all see often takes a lot less time. The foundation work below ground takes ages, and without that, the building won’t stand.

In AI, crucial building blocks take years, or even decades, to emerge. Many of these are not visible to end users, but without this technical foundation, AI products don’t work.

Google has almost all of these building blocks. Microsoft, Amazon, and Meta have many of them, too. OpenAI is frantically implementing them and has a long way to go. Apple has very few, and that’s a big problem for the iPhone maker.

Because these building blocks work in the background, we don't often see problems clearly. This year, though, they were on full display when Apple delayed its big AI-powered Siri update.

The company was trying to radically upgrade the technical underpinnings of its digital assistant for the generative AI age. It wasn’t ready for prime time. Fixing Siri properly could require a massive overhaul — essentially developing crucial AI building blocks almost from scratch in some cases. If that doesn’t work, Apple might have to rely on other tech giants (and rivals) for help or go on an expensive acquisition spree. I asked Apple about all this last week and have not heard back.

Google’s building blocks

Apple needs a multitude of AI building blocks. To give you a better idea, take a look at what Google has put in place over the decades to ensure it’s ready for this AI moment.

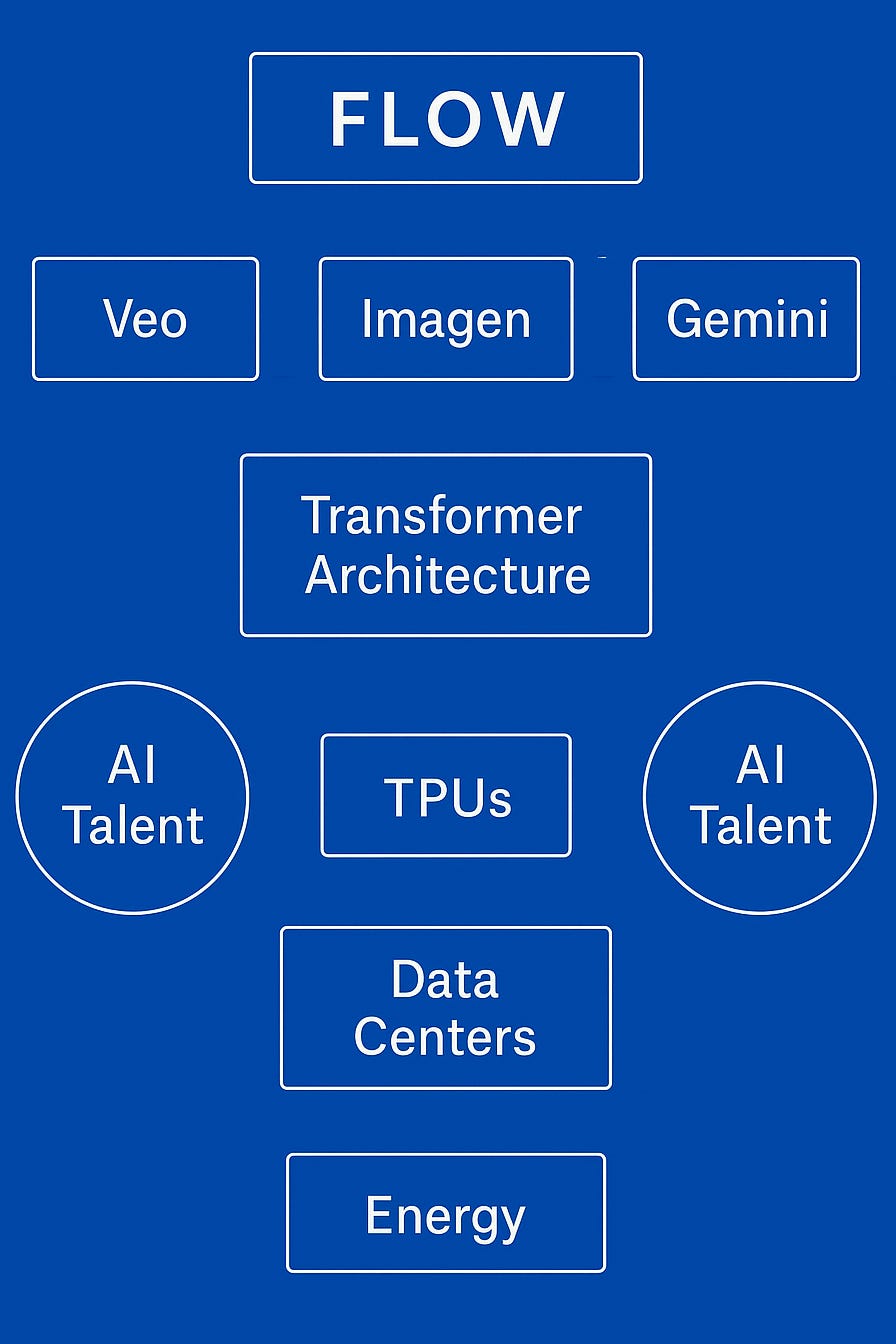

Take the example of Flow, a new generative AI tool Google unveiled last week that helps creators churn out professional videos. Many huge AI building blocks lie underneath this product. Here’s a rough look at some of them:


Veo is Google’s video-generation AI model, now in its third iteration. That wouldn’t exist without all those YouTube videos to train on. Who owns YouTube? Oh yeah, Google.

Imagen is Google's image-generation model, now in its fourth incarnation. Gemini is Google's answer to ChatGPT. The Transformer architecture, the research breakthrough that made generative AI possible, was invented at Google in 2017. Tensor Processing Units (TPUs) are Google's custom AI chips (more on those below).

It’s way more than this, though. Google has indexed everything on the web and has done so for decades. It slurps up mountains of other information in multiple ways. This can be used as training data to develop powerful AI models.

Larry Page’s AI vision

Google cofounder Larry Page talked about this in an interview back in 2000 when most of us were worrying about whether our dishwashers were Y2K compliant.

“Artificial intelligence would be the ultimate version of Google,” he said when Google was barely two years old. “It would understand, you know, exactly what you wanted. And it would give you the right thing. And that’s obviously artificial intelligence, you know, it would be able to answer any question, basically, because almost everything is on the web, right?”

He said that Google had about 6,000 computers to store roughly 100 copies of the web. “A lot of computation, a lot of data that didn’t used to be available,” a cherubic Page explained. “From an engineering scientific standpoint, building things to make use of this is a really interesting intellectual exercise. So I expected to be doing that for a while.”

When Google went public as a search engine provider in 2004, it was already an AI company.

From AlexNet to TensorFlow

In 2012, researchers achieved a major AI breakthrough when they trained computers to recognize and classify objects just by "looking" at them. Alex Krizhevsky, Ilya Sutskever, and their advisor Geoffrey Hinton at the University of Toronto developed this AlexNet technology and formed a company called DNNresearch. Google bought it in 2013, gaining all the intellectual property, including its source code.

If there’s one building block I would highlight that led to Google’s Flow product, it’s this moment, which occurred at least 12 years ago.

In 2014, Google bought DeepMind, a secretive AI lab run by Demis Hassabis and Mustafa Suleyman. This building block inspired Elon Musk to bring OpenAI into being, as a counterweight to Google’s growing AI power. That was over a decade ago. Hassabis and DeepMind now lead many of Google’s most impressive AI creations. Suleyman runs big AI stuff at Microsoft.

Before Google’s big I/O conference in 2016, the company brought in me and a bunch of other journalists to learn about “machine learning,” a branch of AI. Hinton and other AI pioneers spent hours scribbling on a whiteboard trying to explain how this complex tech worked — to an audience that probably got Bs or lower in high school math. It was painful, but again it shows how far ahead Google has been in AI.

That same year, Google unveiled TPUs, a series of home-grown AI chips that compete with Nvidia’s GPUs. Google uses TPUs in its own data centers and also rents them out to other companies and developers via its cloud service. It even developed an AI framework called TensorFlow to support machine learning developers, although Meta’s open-source PyTorch has gained ground there lately.

An ‘AI-first’ world

Last week, I attended I/O again. Google CEO Sundar Pichai said the company is uniquely ready for the generative AI moment. It sounds like a throwaway comment, but it represents a quarter of a century of work that began with Page's AI vision. Almost a decade ago, Pichai said Google was moving toward an "AI-first" computing world.


All these building blocks are massively expensive to create and maintain. For instance, Google plans $75 billion of capex this year, mainly for AI data centers.

How does Google power all these facilities? Well, it’s one of the largest corporate purchasers of renewable energy and recently cut deals to develop three nuclear power stations. Without all this, Google wouldn’t be able to compete in AI.

Apple’s tricky situation

Apple lacks many of these building blocks. For instance, it doesn’t run many large data centers and sometimes even uses Google facilities for important projects.

For example, when Apple device users do iCloud backups, they’re often stored in Google data centers. When it came to training Apple AI models that power the iPhone maker’s new Apple Intelligence, the company asked for additional access to Google’s TPUs for training runs.

Why rely on a rival like this? Well, Apple only started working on a home-grown AI chip for data centers in the last couple of years. That’s roughly seven years after Google TPUs came out.

Apple has a lot of data but has been cautious about using it for AI development due to user privacy concerns. It’s tried to handle AI processing on devices such as iPhones, but these projects require massive computing power that only data centers can provide.

Apple has also been slow to recruit AI talent. For years, it barred its AI researchers from publishing papers publicly, or limited their ability to do so. The freedom to publish has long been a basic ingredient for recruiting this crucial talent. Apple did hire AI pioneer John Giannandrea from Google in 2018, though he has struggled to make an impact, Bloomberg reported.

If generative AI transforms computing devices such as smartphones, this lack of AI building blocks could become a real problem for Apple.

‘Apple is desperate!’

Tech blogger Ben Thompson is a fan of Apple, and even he is worried about this. Last week, he suggested some solutions, most of which seem hard or unappetizing.

For example, he proposed that Apple allow Siri to be replaced by other AIs. That’s attractive because Apple would avoid having to spend $75 billion a year in capex to compete with Google and others on the cutting edge of AI.

The best option here would be to have ChatGPT replace Siri. However, OpenAI recently teamed up with former Apple design chief Jony Ive to develop gadgets that could compete with the iPhone, which suggests that ChatGPT isn’t an easy solution.

Another tie-up with Google may draw antitrust scrutiny. Meta could be an AI partner, except its CEO, Mark Zuckerberg, doesn't seem to like Apple much. Anthropic is another option, though Amazon has a large stake in that startup, and Google owns a chunk, too.

“It does seem increasingly clear that the capex-lite opportunity is slipping away, and Apple is going to need to consider spending serious money,” Thompson wrote.

That could come in the form of acquisitions. Thompson suggested Apple buy SSI, a startup founded by Ilya Sutskever. (He’s one of the AI pioneers behind AlexNet.) However, that would be expensive, and SSI doesn’t have a product yet.

Thompson also suggested Apple buy xAI, Musk’s new AI startup.

That one seems like a joke. But, as Thompson wrote, “Apple is desperate!”


The post Google took 25 years to be ready for this AI moment. Apple is just starting. appeared first on Business Insider.