A few weeks ago, a high school student emailed Martin Hairer, a mathematician known for his startling creativity. The teenager was an aspiring mathematician, but with the rise of artificial intelligence, he was having doubts. “It is difficult to understand what is really happening,” he said. “It feels like every day these models are improving, and sooner rather than later they will render us useless.”

He asked: “If we have a machine that is significantly better than us at solving problems, doesn’t mathematics lose a part of its magic?”

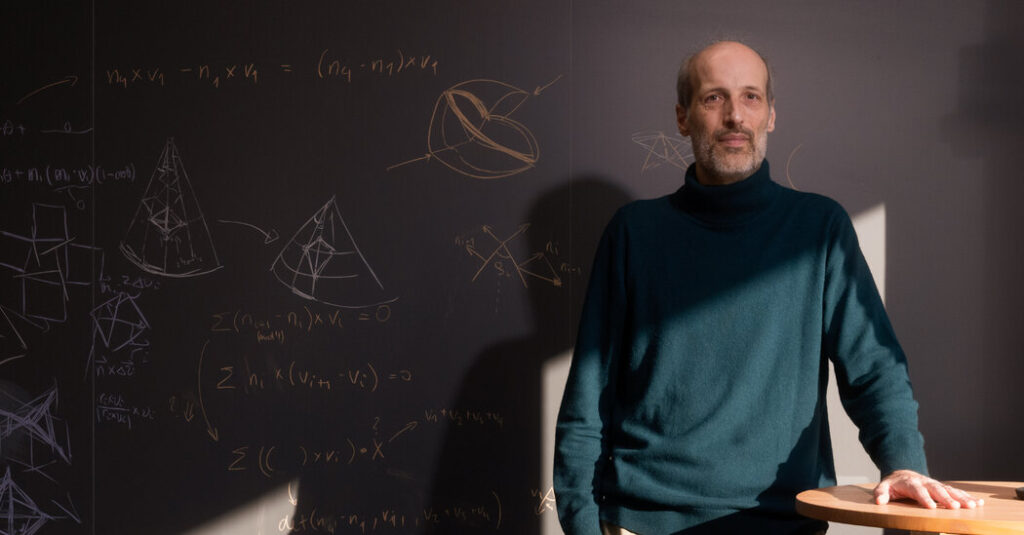

Dr. Hairer, who in 2014 won a Fields Medal, the most prestigious prize in mathematics, and in 2021 won the lucrative Breakthrough Prize, splits his time between the Swiss Federal Institute of Technology in Lausanne and Imperial College London. Responding to the student, he observed that many fields were grappling with the prospect of A.I.-induced obsolescence.

“I believe that mathematics is actually quite ‘safe,’” Dr. Hairer said. He noted that large language models, or L.L.M.s, the technology at the heart of chatbots, are now quite good at solving made-up problems. But, he said, “I haven’t seen any plausible example of an L.L.M. coming up with a genuinely new idea and/or concept.”

Dr. Hairer mentioned this exchange while discussing a new paper, titled “First Proof,” that he cowrote with several mathematicians, including Mohammed Abouzaid of Stanford University; Lauren Williams of Harvard University; and Tamara Kolda, who runs MathSci.ai, a consultancy in the San Francisco Bay Area.

The paper describes a recently begun experiment that collects genuine test questions, drawn from unpublished research by the authors, in an effort to provide a meaningful measure of A.I.’s mathematical competency.

The authors hope that the investigation will add nuance to the oft-hyperbolic narrative around the field of mathematics being “solved” by A.I., and that it will mitigate the consequences of the hype, such as scaring away next-generation students and deterring research funders.

“While commercial A.I. systems are undoubtedly already at a level where they are useful tools for mathematicians,” the authors wrote, “it is not yet clear where A.I. systems stand at solving research-level math questions on their own, without an expert in the loop.”

A.I. companies use what some mathematicians describe as “contrived” or “restrictive” problems for evaluating and benchmarking how well L.L.M.s fare when operating without human help. Occasionally, mathematicians are invited to contribute and paid some $5,000 per problem. (None of the First Proof project authors have any ties to A.I. companies.)

Last April, Dr. Abouzaid, who in 2017 won the New Horizons in Mathematics Prize, declined one such invitation. “I thought there should be a broader, independent and public effort,” he said. He described First Proof as a first-round attempt at such an effort.

“The goal is to get an objective assessment of the research capabilities of A.I.,” said Dr. Williams, a recent Guggenheim fellow and MacArthur fellow.

For the experiment, the authors, who represent a diversity of mathematical fields, each contributed one test question that arose from research they had in the works but had not yet published. They also determined the answers; these solutions are encrypted online and will be released on Feb. 13.

“The goal here is to understand the limits: How far can A.I. go beyond its training data and the existing solutions it finds online?” said Dr. Kolda, who is one of the few mathematicians to be elected a member of the National Academy of Engineering.

The team conducted preliminary tests on OpenAI’s ChatGPT-5.2 Pro and Google’s Gemini 3.0 Deep Think. When given one shot to produce the answer, the authors wrote, “the best publicly available A.I. systems struggle to answer many of our problems.”

The paper’s introduction offers an explanation of its title: “In baking, the first proof, or bulk fermentation process, is a crucial step in which one lets the entire batch of dough ferment as one mass, before dividing and shaping it into loaves.” Having published the first batch of test problems, the team invites the mathematical community to explore them. Then, in a few months, after input and ideas ferment, there will be a second, more structured benchmarking round with a new batch of problems.

The team published their First Proof paper just in time for Euler Day (Saturday, Feb. 7), named after the 18th-century Swiss mathematician Leonhard Euler. The date, 2/7, echoes the first digits of Euler’s number, a versatile mathematical constant, like pi; it is roughly equal to 2.71828… and denoted by “e.” The training of neural-network A.I. systems is based on a technique that Euler discovered for solving ordinary differential equations, known as Euler’s method.
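One common way to see the link between Euler’s method and neural-network training: gradient descent, in its simplest full-batch form, can be read as Euler’s method applied to the “gradient flow” equation dθ/dt = −∇L(θ), with the learning rate playing the role of the step size. The Python sketch below is a hypothetical illustration, not code from the First Proof paper; the function names `euler_method` and `gradient_descent` and the toy loss (θ − 3)² are assumptions chosen for clarity. It also ties back to Euler’s number: applying Euler’s method to y′ = y with y(0) = 1 gives (1 + 1/n)ⁿ after n steps, which approaches e.

```python
import math
from typing import Callable


def euler_method(f: Callable[[float, float], float], y0: float,
                 t0: float, t1: float, steps: int) -> float:
    """Approximate y(t1) for y' = f(t, y), y(t0) = y0, using forward Euler steps."""
    h = (t1 - t0) / steps
    t, y = t0, y0
    for _ in range(steps):
        y = y + h * f(t, y)  # follow the tangent line for one step of size h
        t = t + h
    return y


def gradient_descent(grad: Callable[[float], float], theta0: float,
                     learning_rate: float, steps: int) -> float:
    """Hypothetical helper: forward Euler on the gradient flow d(theta)/dt = -grad(theta)."""
    theta = theta0
    for _ in range(steps):
        # One Euler step with step size h = learning_rate.
        theta = theta - learning_rate * grad(theta)
    return theta


if __name__ == "__main__":
    # y' = y with y(0) = 1 has exact solution e^t, so y(1) = e.
    for n in (10, 100, 1000):
        approx = euler_method(lambda t, y: y, 1.0, 0.0, 1.0, n)
        print(n, approx, math.e - approx)

    # Assumed toy loss L(theta) = (theta - 3)^2, whose gradient is 2 * (theta - 3).
    print(gradient_descent(lambda th: 2 * (th - 3), 0.0, 0.1, 100))
```

Run as a script, the printed errors shrink roughly tenfold each time n grows tenfold, the first-order accuracy expected of Euler’s method, and the toy gradient descent settles near the minimizer θ = 3.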

The following conversation was conducted by videoconference and email, and condensed and edited for clarity.

How is the “First Proof” method novel compared with other benchmarking efforts?

MOHAMMED ABOUZAID The main novelty is that our test questions are actually taken from our own research — we start with things that we care about. Within that space, we try to formulate questions we can test.

What makes for a testable question?

Current A.I. systems have certain well-established limitations. For one, they are notoriously bad at visual reasoning, so we avoided that sort of question; if our goal were to be adversarial, we would ask a question that involved a picture. And companies limit how long a model’s response can be in one go, because the quality of the answer degrades beyond a certain point, so we made sure to avoid queries whose answers require more than five pages.

The paper is careful to clarify “what mathematics research is.” What is it?

ABOUZAID Often in modern research, the key step is to identify the big motivating question, the direction from which the problem should be approached. It involves all kinds of preliminary work, and this is where mathematical creativity takes place.

Once problems are solved, mathematicians tend to evaluate the importance of research contributions in terms of the questions that arise. Sometimes, resolving a conjecture one way is seen as disappointing, because it forecloses the possibility that there would be new questions to investigate.

LAUREN WILLIAMS Let me make a loose analogy. In experimental science, I might divide the components of research into three parts: One, come up with the big question, whose study we hope will shed light on our field. Two, design an experiment to answer the question. Three, perform the experiment and analyze the results.

I can similarly divide math research into parallel parts: One, come up with the big question, whose study we hope will guide our field. Two, develop a framework for finding a solution, which involves dividing the big question into smaller, more tractable questions, like our test questions. Three, find solutions to these smaller questions and prove they are correct.

All three parts are essential. In our First Proof project, we focused on the third component because it is the most measurable. We can query the A.I. model with small, well-defined questions, and then assess whether its answers are correct. If we were to ask an A.I. model to come up with the big question, or a framework, it would be much harder to evaluate its performance.

How did the A.I. systems fare on the “first proof” evaluation?

WILLIAMS One test on my problem produced an interesting series of responses. The model would come up with an answer, and say, “OK, this is the final solution.” Then it would say, “Wait, stop, what about this?” and modify its answer in some way. And so on: “OK, here’s the final solution. Wait, there’s a catch!” It went into an infinite loop.

Another response gave an answer to a closely related but different question.

TAMARA KOLDA My preliminary results were disappointing in that the A.I. was just confused about the problem, ignoring key information in some parts of its answer and not even being consistent about it. I’ve since revised the problem statement and added some more explicit instructions to try to give the A.I. a better chance. So we’ll see how it goes with the final results.

MARTIN HAIRER One thing I noticed, in general, was that the model tended to give a lot of details on the things that were easy, where you would be like: “Yeah, sure, go a bit faster. I’m bored with what you’re saying.” And then it would give very little detail on the crux of the argument. Sometimes it would be like reading a paper by a bad undergraduate student, where they sort of know where they’re starting from, they know where they want to go, but they don’t really know how to get there. So they wander around here and there, and then at some point they just stick in “and therefore” and pray.

Sounds like the classic hand-waving — lacking rigor, skipping over complexities.

HAIRER Yeah, it’s pretty good at giving hand-wavy answers.

So, you weren’t impressed?

HAIRER No, I wouldn’t say that. At times I was actually quite impressed — for example, with the way it could string together a bunch of known arguments, with a few calculations in between. It was really good at doing that correctly.

In your dream world, what would the A.I. be doing for you?

HAIRER Currently the output of the L.L.M.s is hard to trust. They display absolute confidence, but it requires a lot of effort to convince yourself whether their answers are correct or not; I find it intellectually painful. Again, it’s like a graduate student where you don’t quite know whether they are strong or whether they’re just good at B.S. The ideal thing would be a model that you can trust.

KOLDA A.I. is touted as being like a colleague or a collaborator, but I don’t find that to be true. My human colleagues have particular outlooks, and I especially enjoy it when we debate different points of view. An A.I. has whatever viewpoint I tell it to have, which is not interesting at all!

One of my growing concerns is that A.I. could inadvertently slow scientific progress. The theoretical physicist Max Planck is often credited with saying that “science advances one funeral at a time.” I am mindful that I may be quite wrong in my viewpoints. However, if my opinion becomes encoded into A.I. systems and persists indefinitely, will it hinder the evolution of new scientific ideas?

The post “These Mathematicians Are Putting A.I. to the Test” appeared first on The New York Times.