A German plant scientist says two years of academic work vanished in an instant after he clicked the wrong setting in ChatGPT. Let this be a lesson to you all: don’t treat ChatGPT like it’s your desktop PC hard drive.

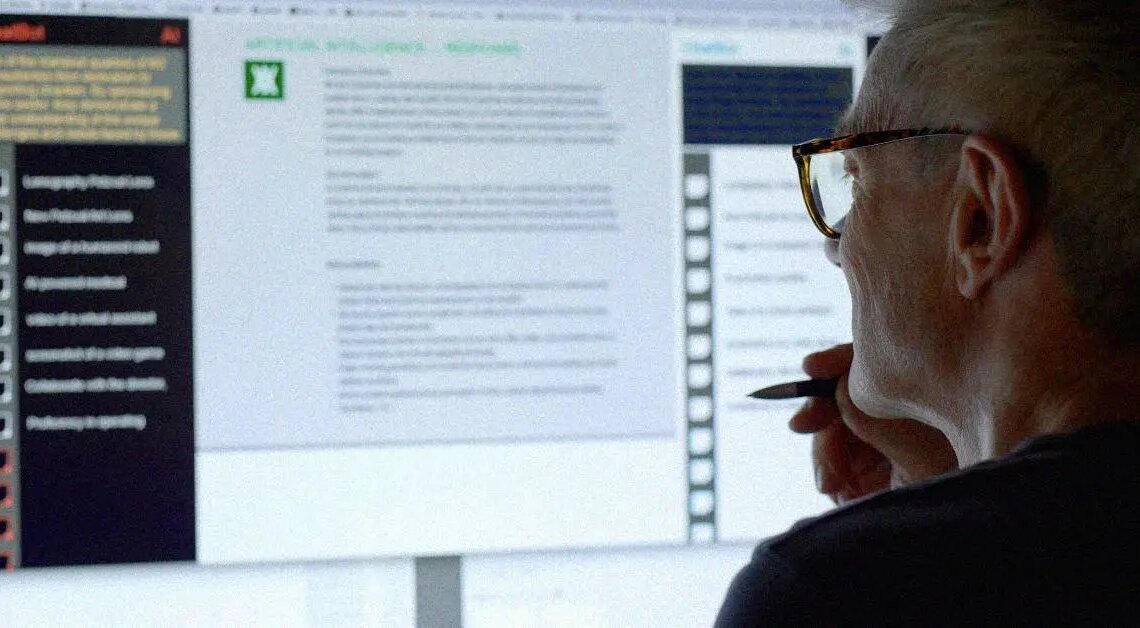

Writing in Nature, Marcel Bucher, a professor at the University of Cologne, spills a lot of ink before essentially admitting that he's massively dependent on ChatGPT. He's incorporated the chatbot into nearly every part of his professional life.

He says it helps him draft emails, analyze student responses, revise papers, plan lectures, and assemble grant applications. He seems to have forgotten how to do anything on his own, and that heavy reliance came back to bite him in the a—.

He thought of ChatGPT as a stable workplace, less a tool than a digital office where everything, he was certain, would stay put. Reader, it did not.

A Small ChatGPT Settings Change Cost a Scientist Years of Work

In August 2025, while experimenting with ChatGPT’s data consent options, Bucher temporarily disabled data sharing to see whether the model would still function. It didn’t. Instead, every chat and project folder he had built over two years instantly disappeared.

There was no prompt asking him to confirm the choice and no option for recovery. One click, and two years of work vanished into thin air.

He followed all the usual troubleshooting steps that Google searches (or ChatGPT queries) suggest. Nothing worked. He even contacted OpenAI, the company behind ChatGPT. They told him there was nothing he could do.

The permanent deletion wasn't a bug; it was a feature. By design, turning off data sharing also wipes out chat history. It's a nuclear option for wiping your ChatGPT history clean and starting fresh.

Online reactions range from unsympathetic to outright mockery, with people pointing and delivering their best Nelson-style "Ha ha!" Unfortunately, Bucher's case is part of a broader problem: universities pushing faculty to incorporate unproven AI tools into their workflows.

While he is being mocked mercilessly across the internet, all Bucher wants is a little more warning before a single button click can detonate years of work. Then there's the larger point: ChatGPT is hardly safe for professional use when it barely seems safe for any use at all.

The post Scientist Loses Years of Work After Tweaking ChatGPT Settings appeared first on VICE.