The big AI companies promised us that 2025 would be “the year of the AI agents.” It turned out to be the year of talking about AI agents, and of kicking the can on that transformational moment down the road to 2026 or maybe later. But what if the answer to the question “When will our lives be fully automated by generative AI robots that perform our tasks for us and basically run the world?” is, like that New Yorker cartoon, “How about never?”

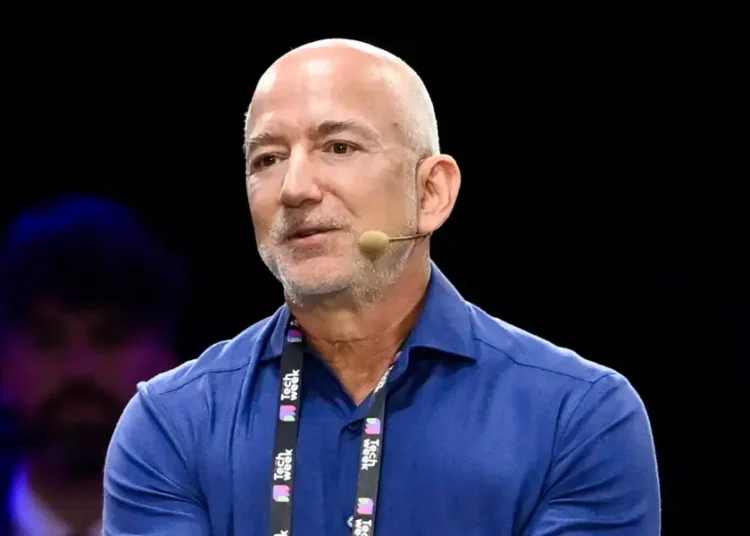

That was basically the message of a paper published without much fanfare some months ago, smack in the middle of the overhyped year of “agentic AI.” Entitled “Hallucination Stations: On Some Basic Limitations of Transformer-Based Language Models,” it purports to mathematically show that “LLMs are incapable of carrying out computational and agentic tasks beyond a certain complexity.” Though the science is beyond me, the authors—a former SAP CTO who studied AI under one of the field’s founding intellects, John McCarthy, and his teenage prodigy son—punctured the vision of agentic paradise with the certainty of mathematics. Even reasoning models that go beyond the pure word-prediction process of LLMs, they say, won’t fix the problem.

“There is no way they can be reliable,” Vishal Sikka, the dad, tells me. After a career that, in addition to SAP, included stints as Infosys CEO and as an Oracle board member, he currently heads an AI services startup called Vianai. “So we should forget about AI agents running nuclear power plants?” I ask. “Exactly,” he says. Maybe you can get an agent to file some papers or something to save time, but you might have to resign yourself to some mistakes.

The AI industry begs to differ. For one thing, a big success in agent AI has been coding, which took off last year. Just this week at Davos, Google’s Nobel-winning head of AI, Demis Hassabis, reported breakthroughs in minimizing hallucinations, and hyperscalers and startups alike are pushing the agent narrative. Now they have some backup. A startup called Harmonic is reporting a breakthrough in AI coding that also hinges on mathematics—and tops benchmarks on reliability.

Harmonic, which was cofounded by Robinhood CEO Vlad Tenev and Tudor Achim, a Stanford-trained mathematician, claims this recent improvement to its product called Aristotle (no hubris there!) is an indication that there are ways to guarantee the trustworthiness of AI systems. “Are we doomed to be in a world where AI just generates slop and humans can’t really check it? That would be a crazy world,” says Achim. Harmonic’s solution is to use formal methods of mathematical reasoning to verify an LLM’s output. Specifically, it encodes outputs in the Lean programming language, a proof assistant known for its ability to formally verify proofs and code. To be sure, Harmonic’s focus to date has been narrow: its key mission is the pursuit of “mathematical superintelligence,” and coding is a somewhat organic extension. Things like history essays, which can’t be mathematically verified, are beyond its boundaries. For now.
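To give a flavor of what that kind of machine checking looks like, here is a minimal Lean 4 sketch. It is an illustration only, not Harmonic’s code or anything Aristotle produced, and the theorem name `add_comm_example` is made up for the example.

```lean
-- A claim is accepted only when the checker accepts its proof.
-- Here the proof appeals to a lemma from Lean's core library.
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- A true arithmetic fact checks by direct computation.
example : 2 + 2 = 4 := rfl

-- A "hallucinated" fact has no valid proof. Uncommenting the next
-- line makes Lean reject the file rather than let the claim through.
-- example : 2 + 2 = 5 := rfl
```

The point is not the arithmetic. It is that a wrong statement fails loudly at check time instead of slipping through unnoticed.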

Nonetheless, Achim doesn’t seem to think that reliable agentic behavior is as much of an issue as some critics believe. “I would say that most models at this point have the level of pure intelligence required to reason through booking a travel itinerary,” he says.

Both sides are right, or maybe even on the same side. On one hand, everyone agrees that hallucinations will continue to be a vexing reality. In a paper published last September, OpenAI scientists wrote, “Despite significant progress, hallucinations continue to plague the field, and are still present in the latest models.” They illustrated that unhappy claim by asking three models, including ChatGPT, to provide the title of the lead author’s dissertation. All three made up fake titles and all misreported the year of publication. In a blog post about the paper, OpenAI glumly stated that in AI models, “accuracy will never reach 100 percent.”

Right now, those inaccuracies are serious enough to discourage the wide adoption of agents in the corporate world. “The value has not been delivered,” says Himanshu Tyagi, cofounder of an open source AI company called Sentient. He points out that dealing with hallucinations can disrupt an entire workflow, negating much of the value of an agent.

Yet the big AI powers and many startups believe these inaccuracies can be dealt with. The key to coexisting with hallucinations, they say, is creating guardrails that filter out the imaginative bullshit that LLMs love to produce. Even Sikka thinks that this is a probable outcome. “Our paper is saying that a pure LLM has this inherent limitation—but at the same time it is true that you can build components around LLMs that overcome those limitations,” he says.
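For the curious, the guardrail idea can be sketched in a few lines of Python. This is a toy under stated assumptions, not anyone’s production system: `model_guesses` is a hypothetical stand-in for a model’s successive answers, and `guarded_answer` and `check_product` are names invented for the illustration. The one real requirement is that the check is independent of the model.

```python
from typing import Callable, Iterable, Optional


def guarded_answer(
    candidates: Iterable[int],
    verify: Callable[[int], bool],
    max_attempts: int = 3,
) -> Optional[int]:
    """Return the first candidate that passes verification, else None."""
    for attempt, candidate in enumerate(candidates):
        if attempt >= max_attempts:
            break  # stop retrying after the attempt budget is spent
        if verify(candidate):
            return candidate
    return None  # nothing verified: hand off to a human instead of guessing


# Hypothetical stand-in for an LLM answering "what is 17 * 23?".
# The first guess plays the role of a hallucination.
model_guesses = [381, 391, 400]


def check_product(guess: int) -> bool:
    # Deterministic check that does not depend on the model at all.
    return guess == 17 * 23


result = guarded_answer(model_guesses, check_product)
print(result)  # 391
```

The wrong first guess is filtered out, and when no guess passes, the function returns `None` rather than an unverified answer. Which is roughly the bargain the industry is proposing.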

Achim, the mathematical verification guy, agrees that hallucinations will always be around—but considers this a feature, not a bug. “I think hallucinations are intrinsic to LLMs and also necessary for going beyond human intelligence,” he says. “The way that systems learn is by hallucinating something. It’s often wrong, but sometimes it’s something that no human has ever thought before.”

The bottom line is that like generative AI itself, agentic AI is both impossible and inevitable at the same time. There may not be a specific annum that will be looked back upon as “the year of the agent.” But hallucinations or not, every year from now on is going to be “the year of more agents,” as the delta between guardrails and hallucinations narrows. The industry has too much at stake not to make this happen. The tasks that agents perform will always require some degree of verification—and of course people will get sloppy and we’ll suffer small and large disasters—but eventually agents will match or surpass the reliability of human beings, while being faster and cheaper.

At that point, some bigger questions arise. One person I contacted to discuss the hallucination paper was computer pioneer Alan Kay, who is friendly with Sikka. His view is that “their argument was posed well enough to get comments from real computational theorists.” (A statement reminiscent of his 1984 take on the Macintosh as “the first personal computer good enough to be criticized.”) But ultimately, he says, the mathematical question is beside the point. Instead, he suggests people consider the issue in light of Marshall McLuhan’s famous “the medium is the message” dictum. “Don’t ask whether something is good or bad, right or wrong,” he paraphrases. “Find out what is going on.”

Here’s what’s going on: We may well be on the cusp of a massive automation of human cognitive activity. It’s an open question whether this will improve the quality of our work and our lives. I suspect that the ultimate assessment of that will not be mathematically verifiable.

This is an edition of Steven Levy’s Backchannel newsletter.

The post The Math on AI Agents Doesn’t Add Up appeared first on Wired.