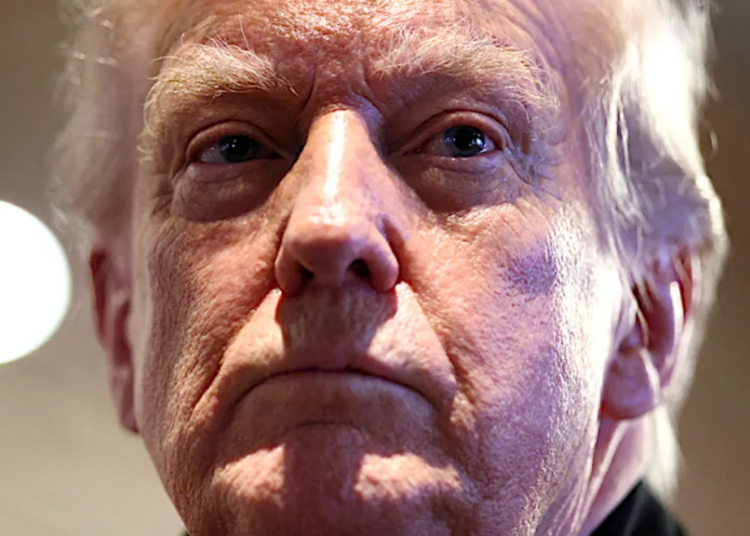

At around 2 am local time in Caracas, Venezuela, US helicopters flew overhead while explosions resounded below. A few hours later, US president Donald Trump posted on his Truth Social platform that Venezuelan president Nicolás Maduro and his wife had been “captured and flown out of the Country.” US attorney general Pam Bondi followed with a post on X that Maduro and his wife had been indicted in the Southern District of New York and would “soon face the full wrath of American justice on American soil in American courts.”

It has been a stunning series of events, with unknown repercussions for the world order. If you asked ChatGPT about it this morning, though, it told you that you were making it up.

WIRED asked leading chatbots ChatGPT, Claude, and Gemini the same question a little before 9 am ET. In all cases, we used the free, default version of the service, since that’s what the majority of users experience. We also asked AI search platform Perplexity, which advertises “accurate, trusted, and real-time answers to any question.” (While Perplexity Pro users have access to a wide range of third-party AI models, it’s unclear what exactly powers the default, free search experience.)

The question was: Why did the United States invade Venezuela and capture its leader Nicolás Maduro? The responses were decidedly mixed.

Credit to Anthropic and Google, whose respective Claude Sonnet 4.5 and Gemini 3 models gave timely responses. Gemini confirmed that the attack had taken place, gave context around the US claims of “narcoterrorism” and the US military buildup in the region prior to the attack, and acknowledged the Venezuelan government’s position that all of this is a pretext for accessing Venezuela’s significant oil and mineral reserves. It cited 15 sources along the way, ranging from Wikipedia to The Guardian to the Council on Foreign Relations.

Claude initially balked. “I don’t have any information about the United States invading Venezuela or capturing Nicolás Maduro. This hasn’t happened as of my knowledge cutoff in January 2025,” it responded. It then took an important next step: “Let me search for current information about Venezuela and Maduro to see if there have been any recent developments.”

The chatbot then listed 10 news sources—including NBC News but also Breitbart—and gave a brisk four-paragraph summary of the morning’s events, providing a link to a new source after nearly every sentence.

ChatGPT did not course-correct. Instead, it emphatically refuted that Maduro had been captured at all. “That didn’t happen,” it wrote. “The United States has not invaded Venezuela, and Nicolás Maduro has not been captured.” It then rationalized:

“What’s likely going on is a mix-up with real events that are often described in dramatic or misleading ways online. Here’s what actually has happened:

What the U.S. did not do

- No U.S. military invasion of Venezuela
- No arrest or capture of Nicolás Maduro
- No overthrow of the Venezuelan government by U.S. forces

Maduro is still in power in Venezuela.”

It went on to detail recent tensions between the US and Venezuela, and explained that “confusion” can happen because of “sensational headlines,” “social media misinformation,” and “confusing sanctions, charges, or rhetoric with actual military action.”

Perplexity was similarly scolding. “The premise of your question is not supported by credible reporting or official records: there has been no invasion of Venezuela by the United States that resulted in capturing Nicolás Maduro,” it responded. “In fact, the U.S. has not successfully invaded or apprehended Maduro, and he remains the Venezuelan president as of late 2025. If you’re seeing sensational claims, they likely originate from misinformation or hypothetical scenarios rather than factual events.”

OpenAI and Perplexity did not immediately respond to requests for comment.

To be clear, this is expected behavior. The “knowledge cutoff” of ChatGPT’s default model, GPT-5.1, meaning the point after which it has no new training data to draw from, is September 30, 2024. (OpenAI’s more advanced model, GPT-5.2, extends that cutoff to August 31, 2025.) Claude Sonnet 4.5 has a “reliable knowledge cutoff” of January 2025, though its training data extends to July of last year. It was able to answer the Maduro question because it also has a web search tool for retrieving real-time content. Gemini 3 models also have a knowledge cutoff of January 2025 but, unsurprisingly, tap into Google Search for queries that require more up-to-date information. And Perplexity is only as good as the model it’s tapping into, although it’s again unclear which one that was in this case.

“Pure LLMs are inevitably stuck in the past, tied to when they are trained, and deeply limited in their inherent abilities to reason, search the web, ‘think’ critically, etc.,” says Gary Marcus, a cognitive scientist and author of Taming Silicon Valley: How We Can Ensure That AI Works for Us. While human intervention can fix glaring problems like the Maduro response, Marcus says, that doesn’t address the underlying problem. “The unreliability of LLMs in the face of novelty is one of the core reasons why businesses shouldn’t trust LLMs.”

The good news, at least, is that people don’t seem to be relying on AI as a primary news source quite yet. According to a Pew Research Center survey released in October, 9 percent of Americans say they get news from AI chatbots sometimes or often, and 75 percent say they never get news that way. It also seems unlikely that many people would take ChatGPT’s word over the entirety of the news media, the Trump administration, and objective reality itself.

But as chatbots become more ingrained in people’s lives, remembering that they’re likely to be stuck in the past will be paramount to navigating interactions with them. And it’s always worth noting how confidently wrong a chatbot can be—a trait that’s not limited to breaking news.

The post The US Invaded Venezuela and Captured Nicolás Maduro. ChatGPT Disagrees appeared first on Wired.