Welcome back to In the Loop, TIME’s new twice-weekly newsletter about AI. We’re publishing these editions both as stories on Time.com and as emails. If you’re reading this in your browser, why not subscribe to have the next one delivered straight to your inbox?

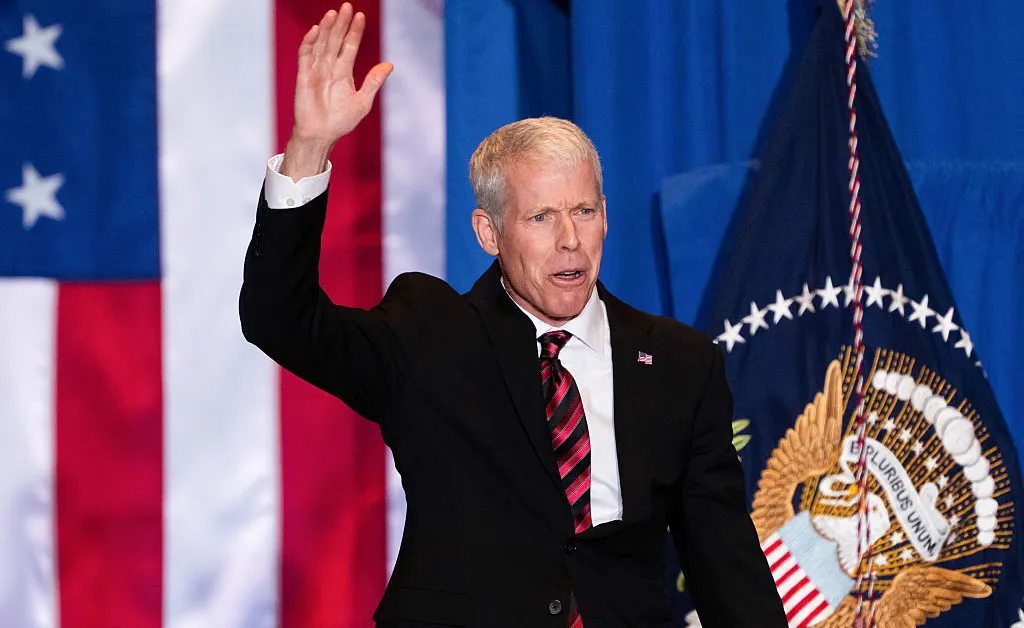

Who to Know: Energy Secretary Chris Wright

Last month, I interviewed Trump’s Energy Secretary Chris Wright for TIME’s Person of the Year feature: The Architects of AI. Wright, who came from the private sector, has now staked much of his legacy on AI acceleration. In our interview, he highlighted AI’s role in advancing crucial scientific research and downplayed climate risks. These are excerpts of our conversation, lightly edited for clarity, with annotations.

How big of a priority is the AI race and the AI infrastructure buildout to your mission in office?

It’s the No. 1 scientific priority of the Trump administration. We’ll be the leaders in that effort, but it is across the administration.

There’s huge upside. Our national labs are applying AI as fast as we can to scientific discovery. One big thing is now you can take cancer or tumors and look at them and not just do tests and what they respond to, but actually understand their molecular structure. You can design molecules that can combat that ability to reproduce or grow. Our hope is in the next few years, a lot of cancers that are death sentences today become manageable conditions, enabled by AI.

[Note: A group of oncologists wrote a paper about the limits of AI in cancer research, writing that while it is leading to “innovations in tumor detection, treatment planning, and patient management,” risks include “potential over-reliance on technology, which may undermine clinical expertise.”]

How important is un-retiring old coal plants to your mission to power AI data centers?

Very important. We won’t keep open all of them. But maybe, the significant majority of all the plants that are slated to close are closing for political reasons. The politicians or the regulators agreed to shut down a coal plant 15 years before its useful life ended. If we want to add net-generating capacity as fast as we can, one of the things we can do is stop digging the hole.

[Note: The U.S. Energy Information Administration (EIA) wrote in 2023 that many coal plants were being retired because they struggled to compete in the market with “highly efficient, modern natural gas-fired power plants and low-cost renewables.” But as AI has drastically increased demand, this calculus has changed.]

So as all these data centers come online, what will the energy mix be?

The biggest source of electric generation today in the U.S. by far is natural gas. So gas will certainly be what’s added the fastest. We’re doing everything we can to get conventional nuclear power moving as quickly as we can. We’ll have lots of those plants under construction in the next 12 to 24 months, but that’s years until they’re electrons on the grid.

Solar technologies continue to advance. I think we’ll see continued development of solar even without the subsidies. 33 years of wind subsidies, that should be enough. These technologies should fly on their own. But they’re probably not a large contributor of new AI capacity.

What would you say to critics who contend that there were a lot of wind and solar projects coming online, but that the administration’s funding cuts kneecapped them?

First, none of them are gone right now. We’ve finally got legislative processes so the subsidies will go away. If you start construction before next July, you’ll get those subsidies.

But then people say, “We need more electrons on the grid.” I say to people, there’s not a swimming pool in the back that stores electrons. What we need is more capacity at peak demand times. That’s all that matters. When it’s the middle of the night and the wind blows really hard in Iowa, that doesn’t do anything to increase the capacity of our grid.

[Note: There are ongoing efforts to improve battery energy storage systems for renewables to address this problem. TIME has written about these efforts over the years.]

Sam Altman says that he believes “a lot of the world gets covered in data centers over time.” Do you support that vision?

I think they’re tremendously beneficial. The highest use of energy is heat. So many people die of the cold. We’ve changed the history of the planet by keeping people warm. Maybe the second-highest use of energy is going to be to generate intelligence. So I think it’s an awesome use of it.

Anything new that moves fast draws public opposition, some of it for good reason. If a data center goes into your community and your electric prices go up, you don’t like that. I wouldn’t like it either.

So we do have a delicate balance. We have to build these data centers and supply energy and power to them, but stop the price rises. If hyperscalers need a gigawatt for their data center, they’ve got to bring us over a gigawatt of new power capacity, and they’ve got to pay rates that help stop the price rise. That hasn’t always been done.

Climate activists lament the fact that big corporations are quietly walking back their net-zero goals. Does that concern you?

No. I think the public has a very unrealistic view of climate change, like it’s the biggest problem in the world today. If you look at the data on climate change, it isn’t remotely close to the world’s biggest problem compared to starvation, public health, education, free trade.

[Note: The WEF’s Global Risks Report 2025 listed a variety of risks as more severe than climate-related ones over a two-year time period. But when considering a 10-year time period, all of their most severe risks were climate-related.]

Would you agree that this AI race has drastically increased our need for energy production?

AI is a contributor to rising demand for electricity. The U.S. has got to get our act together and start growing our electricity production. But is it a mover in global energy demand? You know, not a lot. But even if it was, would I still be in favor? Absolutely. We’re going to make humans more efficient, more secure, more safe, more wealthy.

What to Know: Rapid AI acceleration

AI capabilities are “doubling every eight months” in some domains, according to a major study by the U.K. AI Security Institute published yesterday. The U.K. government body, which conducts empirical studies on frontier AI models ahead of their public release, recorded rapid advancements in biological and cyber capabilities. The report also found that AI companies’ safeguards to stop dangerous model behaviors were improving rapidly—with the time required for experts to discover a jailbreak rising from 10 minutes to more than seven hours.

Rapid change — “We are seeing really rapid capability improvement across basically all domains that the AI Security Institute has measured,” Jade Leung, the AISI’s Chief Technology Officer, told reporters on Wednesday. “In many domains, frontier systems are now matching, or indeed far surpassing human experts.”

Loss of control — AISI researchers also tested models for “precursor behaviors” that might indicate the ability of a model to escape the control of its human operators, for example the ability to self-replicate, access its own model weights, and obtain computing power. On a benchmark comprising 20 of these tasks, models have progressed from a 5% success rate in 2023 to a success rate of more than 60% in the summer of 2025, according to the report. Leung said that all of these tests required specific prompting by researchers, and that no models exhibited “concerning” tendencies spontaneously. Still, she said, “the indicators are important to track as we move toward more advanced systems over time.”

AI companionship — The report also includes new numbers on the extent of human-AI relationships among U.K. adults. 33% of the more than 2,000 people surveyed said they had used AI models for “companionship, emotional support, or social interaction” in the past year, including 4% who said they did so every day. Of these, general-purpose assistants like ChatGPT were the most common form of model used, followed by voice assistants. Models designed specifically for companionship, like Character.AI, comprised only 5%. — Billy Perrigo

AI in Action

This week, Google quietly rolled out its latest model, Gemini 3 Flash, inside Google Search and other platforms. Experts say that it is smarter, faster and cheaper than past models. It would be bad news for OpenAI if Google can reliably offer high-performing, low-cost models, since Gemini already comes built into Chrome and Google Search.

What We’re Reading

“Podcast industry under siege as AI bots flood airways with thousands of programs,” Nilesh Christopher, LA Times

I’ve experienced this personally: I’ve searched my podcasts app to learn about a specific topic, chosen the top option, and realized only 10 minutes in that I was listening to AI hosts, based on the stiffness of their banter and prose. Hundreds of thousands of AI-generated podcasts have flooded the apps—and the “invasion has just begun,” writes Christopher.

The post Why Trump’s Energy Secretary Wants Data Centers to Cover the U.S. appeared first on TIME.