If you’re even minimally attuned to the zeitgeist, you’ve heard the term “AI slop.” It expresses a generalized disdain for almost anything generated by “artificial intelligence,” as though the involvement of AI at all renders the product suspect, even dangerous.

It’s easy to say that AI slop is everywhere. “AI slop is clogging your brain,” warns NPR.

The problem with this complaint is that almost no one makes the effort to define “AI slop.” Is it anything produced with AI? Or any such content appearing on social media? AI images produced for commercial advertising? Any such content made to deceive? Or only content made to deceive politically?

Plainly, what’s needed is a taxonomy of AI slop: which manifestations are merely nuisances, which are actually entertaining, which are threats to social or political order. There’s a range stretching from jokey but obvious fabrications all the way up to the use of AI to put words in the mouths of politicians to sway voters via deceit.

So it’s time to really take the measure of AI slop: which of it is deceptive, which amusing and which merely an avoidable nuisance.

It’s certainly true, as my colleague Nilesh Christopher reports, that tools such as OpenAI’s Sora video app have reduced the cost of generating unauthorized deepfakes of celebrities, dead figures and copyrighted characters to almost nothing. Sora’s rapid uptake by users, one expert told Christopher, “erodes confidence in our ability to discern authentic from synthetic.”

But that’s at the upper end of the developments causing genuine concern. Much of the hand-wringing about AI slop focuses on material that may be ubiquitous but innocuous.

Here, for example, is critic and blogger Ted Gioia on AI-generated art: “Slop art is flat, awkward, stale, listless, and often ridiculous. Slop works are celebrated for their stupidity and clumsiness — which are often amplified by strange juxtapositions of culture memes.”

Gioia’s purpose was to analyze “the new aesthetics of slop,” but what strikes me about his critique is its familiarity. Substitute “pop art” or “op art” for “slop art,” and one could almost be reading a screed from a mainstream critic of the 1950s or ’60s — Clement Greenberg, for one.

That’s not to say that the creators of AI-generated images are artists with the commitment and talent of those earlier figures, only that every era brings us a creative environment that many people find dull, stupid, clumsy, etc., etc.

Nor is it unusual for new forms of expression to result from technological advances. “Every media revolution breeds rubbish and art,” writes Deni Ellis Béchard of Scientific American. “Spam, fluff, clickbait, churnalism, kitsch — slop: These are all ways to describe mass-produced, low-quality content.”

Béchard offers a very long historical perspective on this phenomenon, starting with Gutenberg, whose invention of movable type — “the ChatGPT of the 1450s,” he asserts — ushered in “the mass production of cheap printed material.” The early 1700s brought us “Grub Street,” cheap printed material such as “satires, political tracts, sensational stories and hack journalism. … Some of this material was drivel, sure, but much of it entertained and educated the masses.”

The tsunami of AI slop and the attendant hand-wringing remind us, indeed, that “there is nothing new under the sun,” as the Book of Ecclesiastes puts it.

Some hand-wringing seems overwrought. There’s the backlash over Coca-Cola’s new AI-generated commercial depicting woodland creatures gathering to celebrate a line of trucks delivering holiday supplies of Coke the world over.

The tech website CNET contends that the ubiquity of this ad “is a sign of a much bigger problem,” though it’s not entirely clear about what it thinks the problem is. Among its cavils are that the AI-produced images have flaws that would have been caught by human animators, and also that Coke hasn’t accommodated “its customers’ aversion to AI.”

But I haven’t seen much evidence of the average person’s “aversion” to AI in commercials. In any event, Coke does acknowledge, with a caption at the very start of the commercial, that it’s AI-created.

Coke has addressed questions about its use of AI by stating that “human creativity remains at the heart of Coca‑Cola advertising. … We’re committed to using AI as a human enabler, where it makes sense,” said Pratik Thakar, the company’s AI chief, in a company web post.

It’s true that resorting to AI can lower costs and reduce the time needed to produce any given content. That points to job losses among creative professionals, an issue that has concerned actors, writers and artists in Hollywood, and others in journalism, where AI-generated factual errors are a serious problem.

But if the concern is that, as AI production grows ever more lifelike and pervades the commercial space, viewers will be deceived into thinking that what they see on-screen is real, that doesn’t seem likely. It’s doubtful that viewers will think that an image of ostriches driving a delivery truck is for real, any more than they thought that polar bears embracing one another, Coke bottles in hand, were real in the 1990s, when Coke ads exploited computer-generated imagery, then the coming thing.

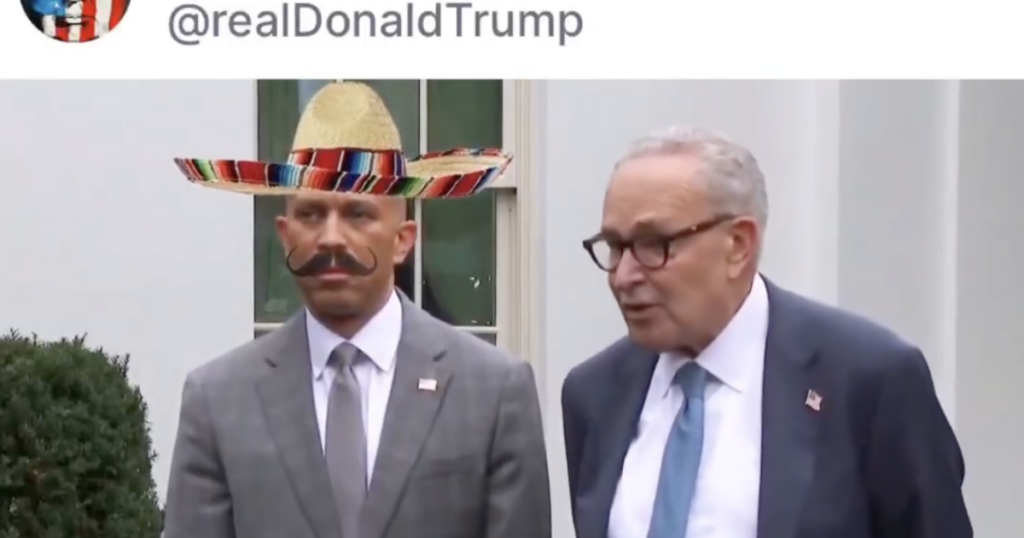

None of this means that AI slop is invariably innocuous. The variety that should most concern us is propaganda — political propaganda, to be precise. In the run-up to the 2024 election, AI-generated partisan deepfakes proliferated on the web.

One was a video purporting to show President Biden denigrating transgender women. “You will never be a real woman,” the deepfaked Biden says. “You have no womb, you have no ovaries, you have no eggs. You’re a homosexual man twisted by drugs and surgery into a crude mockery of nature’s perfection.” The video closes with “Biden” implying that suicide may be the inevitable recourse for these women.

The video was so crude that it was quickly exposed as fake; even conservatives sounded the alarm. “While the audio is spot on for Joe Biden’s voice and cadence (save for the usual stumbles in speech), the mouth movements are far from convincing,” wrote Matt Margolis of PJ Media. “Nevertheless, it’s the stunning realism of Deepfake Biden’s voice that is actually terrifying here. Without the accompanying video, it would be easy to conclude the speech is legitimately Joe Biden speaking, and it raises major concerns about how this technology can be used for nefarious purpose.”

Another deepfake was posted by the presidential campaign of Florida Gov. Ron DeSantis. This one tried to tie Donald Trump, whom DeSantis was challenging for the GOP nomination, to Dr. Anthony Fauci, the government epidemiologist DeSantis had tried to demonize as a perpetrator of forced COVID vaccination. The deepfake depicted Trump embracing Fauci.

The DeSantis campaign, which would collapse shortly thereafter, defended the video by asserting that it was merely a social media post, not an official campaign advertisement. Twitter, where the video had been posted initially, eventually appended a label identifying it as an AI fake.

In the heat of an election campaign, when deliberate deception can often be a path to victory, AI-generated fakes might well find their marks. “While election disinformation has existed throughout our history, generative AI amps up the risks,” the Brennan Center for Justice at NYU Law School warned last year. “It changes the scale and sophistication of digital deception and heralds a new vernacular of technical concepts related to detection and authentication that voters must now grapple with.”

When the latest cycle of AI technology was in its infancy, deepfakes were relatively easy to identify. Guides to spotting fakes were widely published, based on the technology’s inability to get certain things right: it produced images of hands with six fingers, hair and feet that looked unnatural, and shadows falling in the wrong place.

As the technology improves, these artifacts disappear, giving newer fakes “greater potential to mislead would-be sleuths seeking to uncover AI-generated fakes,” the Brennan Center noted. Last year it published advice on how to spot fakes in the modern AI era: seek out “authoritative context from credible independent fact-checkers,” and “approach emotionally charged content with critical scrutiny, since such content can impair judgment and make people susceptible to manipulation.”

Of course, the purveyors of political deepfakes aim to short-circuit exactly those critical faculties, so advising people not to be deceived, especially when voters are inundated with campaign slop before an election, doesn’t feel like an effective safeguard.

It’s possible that over time, AI slop will become its own worst enemy. AI-generated entertainment content has failed to grip audiences, other than consumers of the most generic content. Even in those cases, the AI content is basically a pastiche of human creation. That was the case when an AI-generated country song reached No. 1 on Billboard’s country digital sales chart last month. The song’s vocal style and other creative elements, however, were drawn from a real artist, Blanco Brown, who had no idea of its creation.

That suggests that the downfall of AI slop may not be consumer aversion, but ennui. The goal of entertainment content is for audiences to connect with human creativity, something that AI is not capable of replicating, except superficially.

As the technology becomes so cheap that anyone can use it, we may become deluged by a wave of low-quality noodling. The challenge will become winnowing out the innocuous junk from the truly dangerous slop.

The post Everyone complains about ‘AI slop,’ but no one can define it appeared first on Los Angeles Times.