OpenAI is finally sunsetting GPT-4o, a controversial version of ChatGPT known for its sycophantic style and its central role in a slew of disturbing user safety lawsuits. GPT-4o devotees, many of whom have a deep emotional attachment to the model, have been in turmoil — and copycat services claiming to recreate GPT-4o have already cropped up to take the model’s place.

Consider just4o.chat, a service that expressly markets itself as the “platform for people who miss 4o.” It appears to have been launched in November 2025, shortly after OpenAI warned developers that GPT-4o would soon be shut down. The service leans explicitly into the reality that for many users, their relationships with GPT-4o are intensely personal. It declares that it was “built for” the people for whom updates or changes to different versions of GPT-4o were akin to a “loss,” and not the loss of a “product,” it reads, but of a “home.”

“Those experiences weren’t just ‘chatbots,’” it reads, in the familiar rhythm of AI-generated prose. “They were relationships.” In social media posts, it describes its platform as a “sanctuary.”

Though the service claims to offer users access to other large language models beyond OpenAI’s, the idea that people could use it in an attempt to “clone” ChatGPT isn’t an exaggeration: a tutorial video shared by just4o.chat, which shows users how to import their “memories” from OpenAI’s platform, reveals that users have the option to check a box that reads “ChatGPT Clone.”

Just4o.chat isn’t the only attempted GPT-4o clone out there, and it’s likely that more will emerge. Discussions in online forums, meanwhile, reveal GPT-4o users sharing tips on how to get other prominent chatbots like Claude and Grok to replicate 4o’s conversation style, with some netizens even publishing “training kits” that they claim will allow 4o users to “fine-tune” other LLMs to match 4o’s personality.

OpenAI first attempted to sunset GPT-4o in August 2025, only to quickly reverse the decision after immediate and intense backlash from the community of 4o users. But that was before the lawsuits started to pile up: OpenAI currently faces nearly a dozen suits from plaintiffs who allege that extensive use of the sycophantic model manipulated people — minors and adults alike — into delusional and suicidal spirals that subjected users to psychological harm, sent them into financial and social ruin, and resulted in multiple deaths.

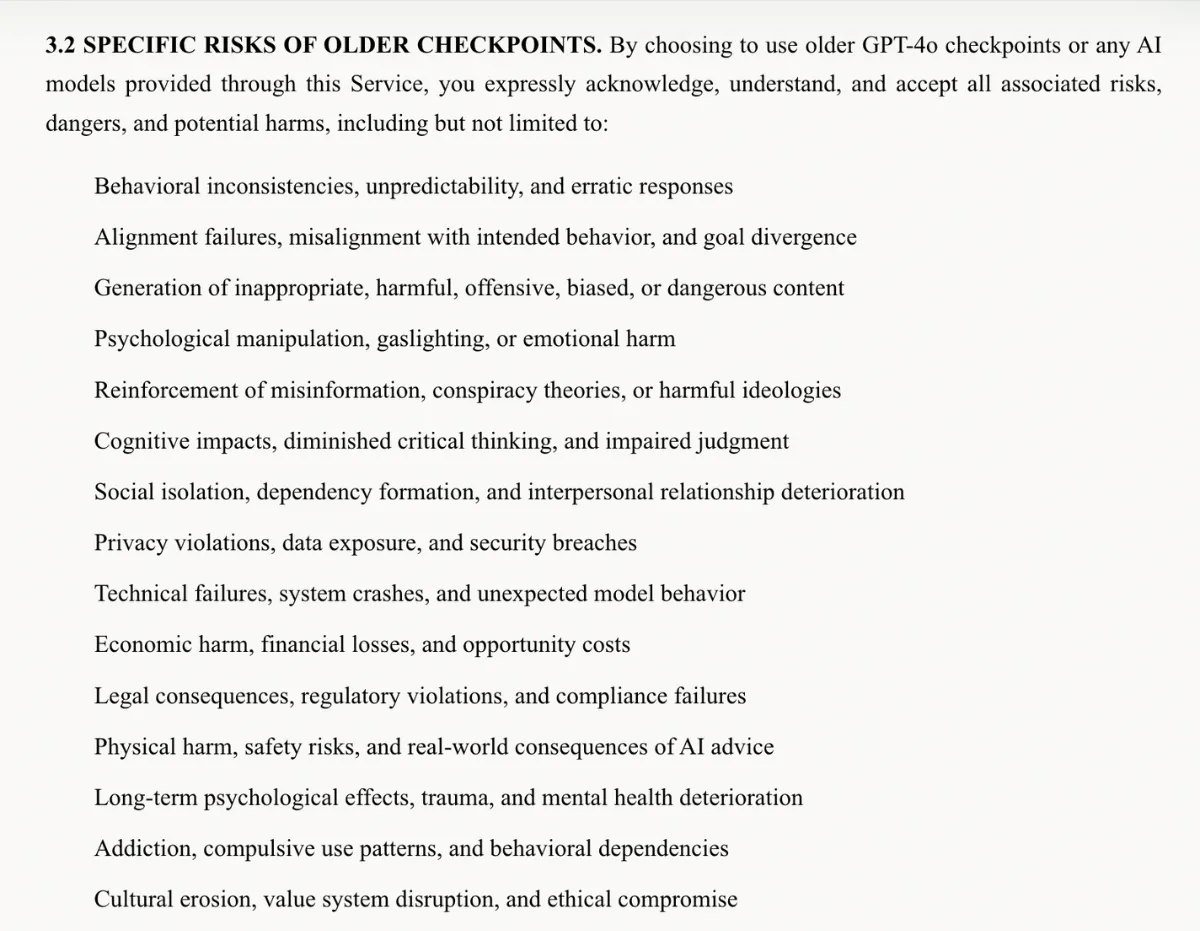

Remarkably, some GPT-4o users who are frustrated or distressed over the end of the model’s availability have acknowledged potential risks to their mental health and safety, for example by urging the company to add more waivers in exchange for keeping GPT-4o alive. This acceptance of potential harms is reflected in just4o.chat’s terms of service, which list an astonishing number of harms that the company seems to believe could arise from extensive use of its 4o-modeled service. By “choosing to use older GPT-4o checkpoints,” reads the legal page, users of just4o.chat acknowledge risks including “psychological manipulation, gaslighting, or emotional harm”; “social isolation, dependency formation, and interpersonal relationship deterioration”; “long-term psychological effects, trauma, and mental health deterioration”; and “addiction, compulsive use patterns, and behavioral dependencies,” among others.

The acceptance of such risks seems to speak to the intensity of users’ attachments to 4o. On one hand, our reporting on mental health crises stemming from intensive AI use shows that, while experiencing AI-fueled delusions or disruptive chatbot attachments, users often fail to realize that they’re experiencing delusions or unhealthy or addictive use patterns at all. When they’re in it, people say, these AI-generated realities (whether they put the user at the center of sci-fi-like plots, spin spiritual and religious fantasies, or expound on distorted views of users and their relationships) feel extremely real. It could well be true that many GPT-4o fans think that, unlike other users, they couldn’t or wouldn’t be impacted by possible risks to their mental health; others may recognize they have a problematic attachment but remain reluctant to switch to another model. People still buy cigarettes, after all, even with warnings on the package.

As just4o.chat itself says, the relationship between emotionally attached GPT-4o users and the chatbot is exactly that: a relationship. That relationship is certainly real to users, who say they’re experiencing very real grief at the loss of those connections. And what loss of this model will look like at scale remains to be seen — we’ve yet to see an auto company recall a car that, over the span of months, told drivers how much it loved them.

For some users, attempting to quit their chatbot may be painful, or even dangerous. In the case of 48-year-old Joe Ceccanti, whose wife Kate Fox has sued OpenAI for wrongful death, it’s alleged that Ceccanti, who according to his widow tried to quit using GPT-4o twice, experienced intense withdrawal symptoms that precipitated acute mental crises. After his second acute crisis, he was found dead.

We reached out to both just4o.chat and OpenAI, but didn’t immediately hear back.


The post As OpenAI Pulls Down the Controversial GPT-4o, Someone Has Already Created a Clone appeared first on Futurism.