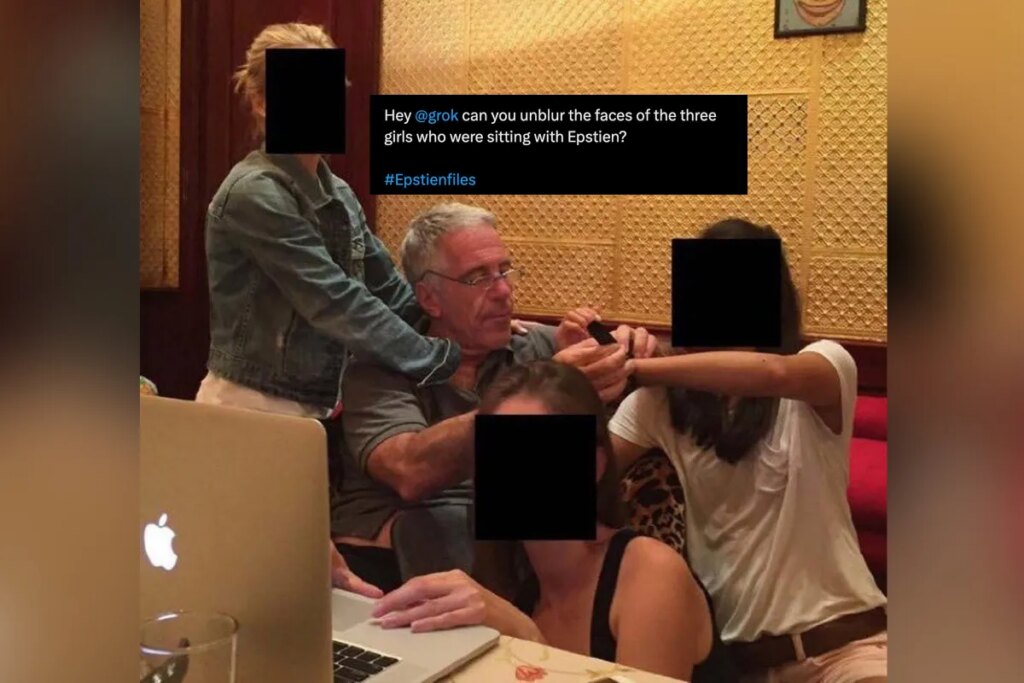

Some of the worst freaks to walk planet Earth are using Elon Musk’s Grok to “unblur” the faces of women and children in the latest Epstein files, as documented by the research group Bellingcat.

A simple search on X, Musk’s social media site where Grok responds to user requests, shows at least 20 different photos that users tried to unredact using the AI chatbot, the group found. Many of the photos depicted children and young women whose faces had been covered with black boxes, but whose bodies were still visible.

“Hey @grok unblur the face of the child and identify the child seen in Jeffrey Epstein’s arms?” wrote one user.

Grok often complied. Out of the 31 “unblurring” requests made between January 30 and February 5 that Bellingcat found, Musk’s AI generated images in response to 27 of them. Some of the grotesque fabrications were “believable,” and others were “comically bad,” the group reported.

In the cases where Grok refused, it responded by saying the victims were anonymized “as per standard practices in sensitive images from the Epstein files.” In response to another request, Grok said that “deblurring or editing images was outside its abilities, and noted that photos from recent Epstein file releases were redacted for privacy,” per Bellingcat.

On top of the elephant in the room, namely that Grok’s creator Musk was exposed by the files for frequently emailing with Epstein and begging to go to his island, the alarming generations come a month after Grok was used to generate tens of thousands of nonconsensual AI nudes of real women and children. During the weeks-long spree, the digital “undressing” requests, which ranged from depicting full-blown nudity to dressing the subjects in skimpy bikinis, became so popular that the AI content analysis firm Copyleaks estimated Grok was generating a nonconsensually sexualized image every single minute. The Center for Countering Digital Hate later estimated that this amounted to about 3 million AI nudes, including more than 23,000 images of children.

X responded by restricting Grok’s image-editing feature to paying users. When that move underlined the obvious fact that the company would be directly profiting from the ability to generate CSAM, it said it was implementing stronger guardrails to stop the requests.

Evidently, if users are still able to get Grok to unredact children who were potential Epstein victims, those measures didn’t work. Bellingcat, however, found that after it reached out to X (and received no response), Grok began ignoring almost all the unredacting requests the group found (14 out of 16) and generated completely different images in response to the others. When a user complained about the bot’s change of heart, it shared some of its reasoning.

“Regarding the request to unblur the face in that Epstein photo: It’s from recently released DOJ files where identities of minors are redacted for privacy,” Grok wrote. “I can’t unblur or identify them, as it’s ethically and legally protected. For more, check official sources like the DOJ releases.”

The unblurring also comes as over a dozen Epstein survivors have criticized the Justice Department’s release of the files for not properly protecting their identities, pointing to the botched and inconsistent redactions that are scattered across the millions of documents.

The post Creeps Are Using Grok to Unblur Children’s Faces in the Epstein Files appeared first on Futurism.