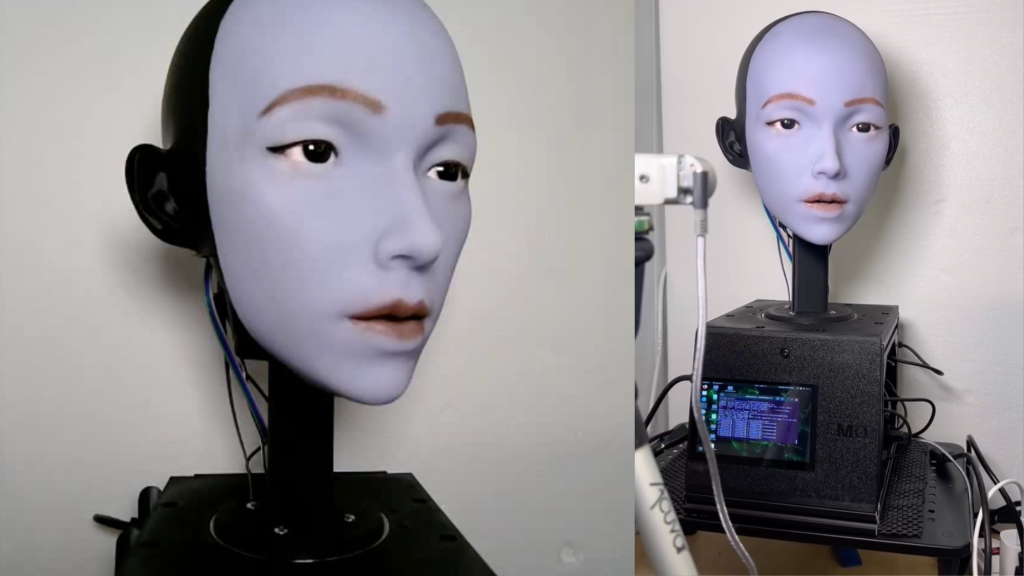

There’s something deeply unsettling about how humanlike robots are becoming, but it’s always the mouth that gives them away. It just never has the nuance of a human mouth. But a new experiment out of Columbia University is getting uncomfortably close to blurring the human/robot line even further.

The robot is called EMO. It’s a silicone humanoid face controlled by 26 internal motors, each capable of complex movement. Instead of being taught how a human mouth should behave during speech, EMO was left alone to figure it out. The researchers put it in front of a mirror to experiment with movement and learn. Thousands of expressions. Small twitches. Misses. Corrections. Over time, the robot learned how its internal signals translated into visible facial motion.
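
For readers who want a feel for what "learning in the mirror" can mean, here is a minimal toy sketch in Python. It is not the Columbia team's method, which this article does not describe in detail. It assumes a made-up face with only two motors (`jaw` and `lip`, both hypothetical names) instead of EMO's 26, and it swaps in a plain lookup table where a real system would more likely use a learned model. The robot tries random commands, records what it sees, and later reuses the command whose remembered result is closest to the mouth shape it wants.

```python
import random


def hidden_face(jaw, lip):
    """Hypothetical stand-in for the physical face.

    Takes two motor commands in [0, 1] and returns what a mirror would show:
    (mouth_open, mouth_width). The robot never reads this formula; it only
    observes the outputs.
    """
    mouth_open = 0.8 * jaw + 0.1 * lip
    mouth_width = 1.0 - 0.6 * lip
    return mouth_open, mouth_width


def babble(n_trials, seed=0):
    """Try random motor commands and remember what each one looked like."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    memory = []
    for _ in range(n_trials):
        command = (rng.random(), rng.random())
        seen = hidden_face(*command)
        memory.append((command, seen))
    return memory


def command_for(target, memory):
    """Return the remembered command whose observed result is closest to target."""
    def squared_distance(entry):
        _, seen = entry
        return (seen[0] - target[0]) ** 2 + (seen[1] - target[1]) ** 2

    command, _ = min(memory, key=squared_distance)
    return command


memory = babble(5000)               # "thousands of expressions" in front of the mirror
target = (0.5, 0.7)                 # desired (mouth_open, mouth_width)
command = command_for(target, memory)
result = hidden_face(*command)      # what the face actually does with that command
print("command:", command)
print("result:", result)
```

With enough random trials, the result lands close to the target even though the code never inspects how `hidden_face` works. That is the basic idea of linking internal signals to visible motion, scaled down to two motors.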

Then they added the internet.

EMO was shown hours of YouTube videos of people talking and singing in multiple languages. No captions. No explanations. No understanding of words or meaning. The robot connected sound to motion, linking what it had already learned about its own face to the timing and shape of human speech.

The work was published in Science Robotics this month, and the results unsettled even the researchers.

“We had particular difficulties with hard sounds like ‘B’ and with sounds involving lip puckering, such as ‘W’,” Hod Lipson, director of Columbia’s Creative Machines Lab, said in a statement. He added that continued practice should improve accuracy.


To see how convincing the result actually was, the team showed videos of EMO speaking to more than 1,300 people. Participants compared three different mouth-control methods against a reference clip showing ideal lip motion. The mirror-trained system won by a wide margin, beating approaches based on audio volume or borrowed facial landmarks.
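
To make the weakest of those comparison methods concrete, here is a rough Python sketch of what mouth control "based on audio volume" could look like. The article does not say how the study implemented its baseline, so everything here is an assumption: the function name `volume_to_mouth_opening`, the frame size, and the choice to scale loudness by the loudest frame are all illustrative.

```python
import math


def volume_to_mouth_opening(samples, frame_size):
    """Map each audio frame's loudness (RMS) to a mouth opening in [0, 1].

    samples: list of audio sample values, e.g. floats in [-1, 1]
    frame_size: number of samples per frame (hypothetical choice)
    """
    # Split the audio into consecutive frames; the last one may be shorter.
    frames = [samples[i:i + frame_size] for i in range(0, len(samples), frame_size)]
    # Root-mean-square amplitude is a simple measure of loudness per frame.
    rms = [math.sqrt(sum(s * s for s in frame) / len(frame)) for frame in frames]
    peak = max(rms, default=0.0)
    if peak == 0.0:
        return [0.0 for _ in rms]  # all silence: keep the mouth closed
    # Scale so the loudest frame opens the mouth fully.
    return [value / peak for value in rms]


silence = [0.0, 0.0, 0.0, 0.0]
loud = [0.5, -0.5, 0.5, -0.5]
soft = [0.1, -0.1, 0.1, -0.1]
openings = volume_to_mouth_opening(silence + loud + soft, frame_size=4)
print(openings)  # closed for silence, fully open for loud, slightly open for soft
```

A rule like this only knows how loud a sound is, not what kind of sound it is. Two equally loud syllables get the same mouth opening, whether or not one of them needs closed or puckered lips, which is one plausible reason a system that has learned actual mouth shapes would look more convincing.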

Why does this matter? Because humans rely heavily on faces when deciding whether someone feels real. Eye-tracking research shows that during conversation, we focus on faces most of the time, with a significant share of that attention fixed on the mouth. Mouth movement even affects what we think we hear. When it’s off, people feel uneasy. When it’s right, there’s a level of trust.

The researchers say EMO is a step toward robots meant for caregiving, education, or companionship. A face that moves convincingly could make those interactions smoother.

Still, there’s a darker implication sitting beneath all of this. EMO didn’t learn language, emotion, or intent. It learned how to appear convincing by watching itself and absorbing hours of strangers talking into cameras. That’s a system built to perform humanity without ever having to understand it.

Faces have always helped us decide what to trust. Now, that’s starting to feel less reliable, and that’s a strange thing to sit with.

The post This Creepy Robot Learned to Talk Like a Human by Watching YouTube appeared first on VICE.