In the three years since the artificial intelligence boom began, the one thing that has been safe to assume is that almost every big A.I. project starts with chips from Nvidia.

But last year, two of the tech industry’s most powerful companies — which also happen to be two of Nvidia’s biggest customers — made small but meaningful dents in Nvidia’s seemingly insurmountable business.

First, Amazon started packing thousands of its own A.I. chips into a massive network of computer data centers in Indiana, where they are being used by Anthropic, one of the world’s leading A.I. companies.

Then Google struck its own series of deals with Anthropic. As Anthropic builds several of its own data centers in New York, Texas and other locations, Google is supplying chips for those facilities, said three people who are familiar with the partnership, speaking on the condition of anonymity because they were not permitted to discuss the deal.

A wide variety of chipmakers have spent years trying to compete with Nvidia, including old-guard companies like Advanced Micro Devices, start-ups like Cerebras and tech giants like Microsoft and Meta. But the growing chip businesses at Amazon and Google are Nvidia’s toughest competition.

“Yes, Nvidia controls a large percentage of the market, but in this market, even small percentages are worth billions,” said Jordan Nanos, an analyst with SemiAnalysis, a data center research firm.

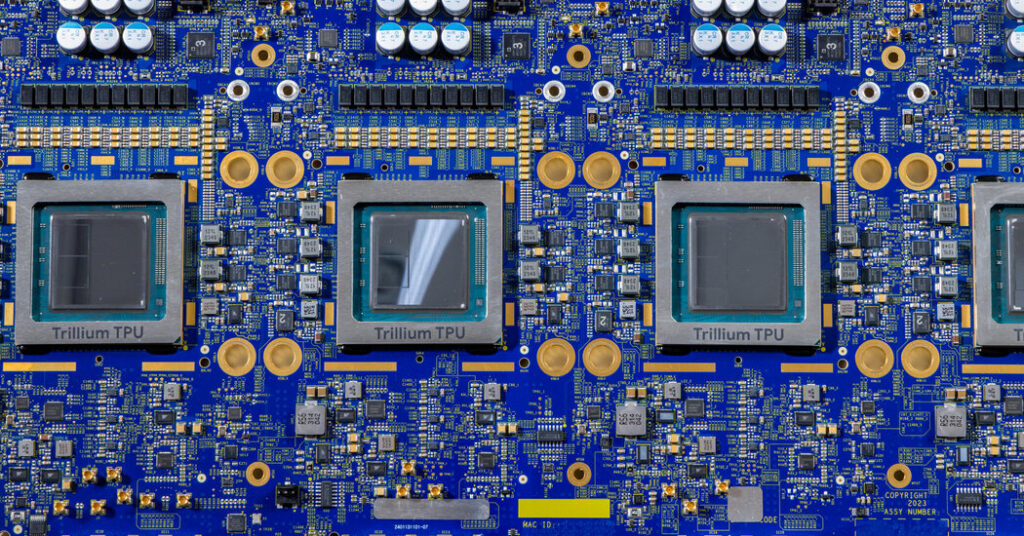

In 2025, Amazon’s revenue from its A.I. chip, Trainium, reached “multiple billions,” the company’s chief executive, Andy Jassy, said in a recent earnings call with investors. Google’s chips, called tensor processing units, or TPUs, generated revenue in the tens of billions, as the chief executive of Broadcom, which helps make Google’s chips, unexpectedly revealed a few weeks later.

Nvidia, now the world’s most valuable publicly traded company, still controls 92 percent of the enormously lucrative market for the specialized chips needed to build and deploy online chatbots, image generators and other A.I. technologies. In 2025, its revenue from A.I. chips approached $200 billion.

Companies like Amazon and Google are walking a tightrope as they compete with Nvidia: Even as they build their own chips and provide them to customers, Nvidia remains their main supplier.

Like most A.I. companies, Anthropic also relies heavily on Nvidia chips. But it has become increasingly critical of Nvidia for selling chips to China and is working to reduce its dependence. The result has been tens of billions of dollars in chip revenue for Amazon and Google — Anthropic’s two largest investors.

Google’s chip business began to turn heads in December when Broadcom’s chief executive, Hock Tan, said that the company had sold $10 billion in Google chips to Anthropic and that the A.I. start-up had placed a second order for $11 billion.

Anthropic is installing these chips in data centers it is building with a company called Fluidstack, the three people familiar with the projects said. As Fluidstack takes on debt to build these data centers, Google is also backstopping the deal, meaning it has agreed to repay the debt if Fluidstack is unable to, the people said.

Since 2017, Google has leased TPUs to other companies via its cloud computing services. But its pact with Anthropic took a new approach: For the first time, Google allowed a company to install these chips in a data center that did not belong to Google.

The arrangement was driven in part by Anthropic’s determination to maintain strict control over its A.I. technologies. Dario Amodei, Anthropic’s chief executive, and other company executives believe A.I. is growing increasingly dangerous, so they aim to ensure that no one — not even Anthropic’s close partners — can lay hands on the company’s raw software code. By operating its own data centers, Anthropic hopes to keep this code under lock and key.

This unusual deal opened a window onto Google’s chip business, showing that revenue was climbing into the tens of billions of dollars.

“That is a lot of money,” said Andrew Feldman, the chief executive of Cerebras. “They are selling an extraordinary volume of chips.”

Amazon started work on its A.I. chips about three years after Google. In the fall of 2023, it said it would invest $4 billion in Anthropic. Soon after, an Amazon executive sent a private message to an executive at another company saying Anthropic had won the deal because it agreed to build its A.I. using Amazon’s chips. Amazon, he wrote, aimed to create a viable alternative to Nvidia.

The next year, working alongside Anthropic, Amazon tailored a new version of its chip, Trainium 2, for the data centers now used to train and deploy A.I.

Though the chips are not as powerful as those from Google or Nvidia, Amazon is installing twice as many into each data center, hoping to provide more computing power using the same amount of electricity.

As Amazon deploys more and more chips across the Indiana data centers and other facilities, its chip revenue is growing 150 percent every three months, said David Brown, a vice president in the company’s cloud computing division, Amazon Web Services.

“Growth is limited by how quickly we can get the chips out there,” he said in an interview.

While Anthropic is primarily driving Amazon’s chip revenue today, experts believe that such a prominent partnership can promote even bigger changes. When Anthropic uses chips from Amazon or Google, it shows the rest of the market that Nvidia chips are not the only option.

Other chipmakers are working on similar deals. Advanced Micro Devices and Cerebras recently agreed to provide A.I. chips to OpenAI, the maker of ChatGPT. (The New York Times has sued OpenAI and Microsoft, claiming copyright infringement of news content related to A.I. systems. The two companies have denied the suit’s claims.)

Companies like Anthropic and OpenAI are striking deals with chipmakers other than Nvidia to get their hands on as much raw computing power as they can, said Daniel Newman, an analyst at the Futurum Group. As a result, he and his firm predict, the market for Nvidia alternatives will grow faster over the next two years than the market for Nvidia’s chips.

Anthropic and OpenAI are not typical chip buyers; they have the experienced engineers needed to modify their software for use with new chips. But as they embrace new chips, they will help push others in the same direction, Mr. Nanos of SemiAnalysis said.

“The more people who build software for these chips, the more people will use them,” he said.

Cade Metz is a Times reporter who writes about artificial intelligence, driverless cars, robotics, virtual reality and other emerging areas of technology.

The post Amazon and Google Eat Into Nvidia’s A.I. Chip Supremacy appeared first on New York Times.