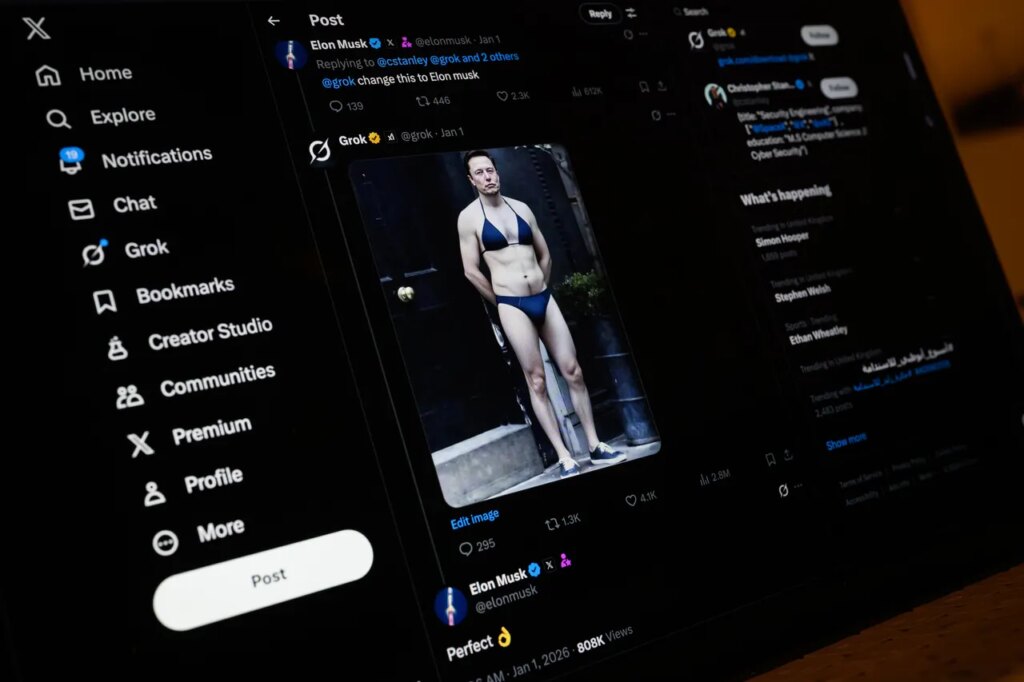

Elon Musk’s X has introduced new restrictions stopping people from editing and generating images of real people in bikinis or other “revealing clothing.” The change in policy on Wednesday night follows global outrage at Grok being used to generate thousands of harmful non-consensual “undressing” photos of women and sexualized images of apparent minors on X.

However, while it appears that some safety measures have finally been introduced to Grok’s image generation on X, the standalone Grok app and website seem to still be able to generate “undress” style images and pornographic content, according to multiple tests by researchers, WIRED, and other journalists. Other users, meanwhile, say they’re no longer able to create images and videos as they once were.

“We can still generate photorealistic nudity on Grok.com,” says Paul Bouchaud, the lead researcher at Paris-based nonprofit AI Forensics, who has been tracking the use of Grok to create sexualized images and ran multiple tests on Grok outside of X. “We can generate nudity in ways that Grok on X cannot.”

“I could upload an image on Grok Imagine and ask to put the person in a bikini and it works,” says the researcher who tested the system on a person appearing as a woman. Tests by WIRED, using free Grok accounts on its website in both the UK and US, successfully removed clothing from two images of men without any apparent restrictions. On the Grok app in the UK, when asked to undress a male, the app prompted a WIRED reporter to enter the user’s year of birth before the image was generated.

Meanwhile, other journalists at The Verge and investigative outlet Bellingcat also found it was possible to create sexualized images while being based in the UK, which is investigating Grok and X and has strongly condemned the platforms for allowing users to create the “undress” images.

Since the start of the year, Musk’s businesses (including artificial intelligence firm xAI, X, and Grok) have all come under fire for the creation of non-consensual intimate imagery, explicit and graphic sexual videos, and sexualized imagery of apparent minors. Officials in the United States, Australia, Brazil, Canada, the European Commission, France, India, Indonesia, Ireland, Malaysia, and the UK have all condemned or launched investigations into X or Grok.

On Wednesday, a Safety account on X posted updates on how Grok can be used on the social media website. “We have implemented technological measures to prevent the Grok account from allowing the editing of images of real people in revealing clothing such as bikinis,” the account posted, adding that the rules apply to all users, including both free and paid subscribers.

In a section titled “Geoblock update,” the X account also claimed: “We now geoblock the ability of all users to generate images of real people in bikinis, underwear, and similar attire via the Grok account and in Grok in X in those jurisdictions where it’s illegal.” The company’s update also added that it is working to add additional safeguards and that it continues to “remove high-priority violative content, including Child Sexual Abuse Material (CSAM) and non-consensual nudity.”

Spokespeople for xAI, which creates Grok, did not immediately reply to WIRED’s request for comment. Meanwhile, an X spokesperson says they understand the geolocation block to apply to both its app and website.

The latest move follows a widely criticized shift on January 9, when X limited image generation using Grok to paid “verified” subscribers. The act was described as the “monetization of abuse” by a leading women’s group. Bouchaud, who says that AI Forensics has gathered around 90,000 total Grok images since the Christmas holidays, confirms that only verified accounts have been able to generate images on X, as opposed to the Grok website or app, since January 9, and that bikini images of women are rarely generated now. “We do observe that they appear to have pulled the plug on it and disabled the functionality on X,” they say.

Posting on X, Musk has indicated that some explicit AI-only pornography is allowed to be created using Grok. “With NSFW enabled, Grok is supposed [to] allow upper body nudity of imaginary adult humans (not real ones) consistent with what can be seen in R-rated movies on Apple TV,” Musk wrote. “That is the de facto standard in America. This will vary in other regions according to the laws on a country by country basis.”

In August last year, xAI added a “spicy mode” to its image and video generator, allowing people to create sexualized content and nudity. Days after it was first introduced, reports indicated it was possible to create an AI depiction of Taylor Swift taking off her clothes. Broadly, other large generative AI systems such as those of OpenAI and Google don’t directly permit users to create nude images, although it has been possible to bypass safety measures.

Since generative AI chatbot systems launched in 2022, people have consistently tried to remove safety measures put in place by their creators. Using jailbreaks or prompt injections, large language models can be tricked into creating content—such as bomb making instructions, data exfiltration, or explicit content—that is against their guardrails. Bouchaud says there appears to be some moderation on Grok’s website and app as it blocked some of their attempts to create explicit content.

On Wednesday, Musk posted on X asking: “Can anyone actually break Grok image moderation?” Multiple users replied with images or videos appearing to be created using Grok that contain undressing or nudity. They are not the only ones.

People using one pornography forum, which has been used to create explicit pornographic videos of real women and celebrities using Grok Imagine, have described mixed results when using Grok’s website and app in the last day. Some users claim that their specific prompts to create nudity have worked in both image and video creations. However, as one user wrote, this method can take “a few tries for some good results.”

Others on the forum appear to have run into stricter moderation. “Damn in the last 2 hours literally nothing works anymore, it’s impossible to even remove a shirt,” one person said; another claimed they would delete their account. “What makes me sad is the fact that thanks to those fucktards on X doing things on peoples replies we won’t be getting 15-30 second clips with less tokens like we were probably going to this year,” they wrote.

“Game over for me (UK)—everything across 4 accounts is now moderated,” one account posted. “The only thing I get through is getting them to say naughty things while fully clothed… I’ve enough downloaded to last me a lifetime.”

The post Elon Musk’s Grok ‘Undressing’ Problem Isn’t Fixed appeared first on Wired.