An amateur forensics mob assembled after a federal immigration officer shot and killed Renee Nicole Good in Minneapolis on Wednesday.

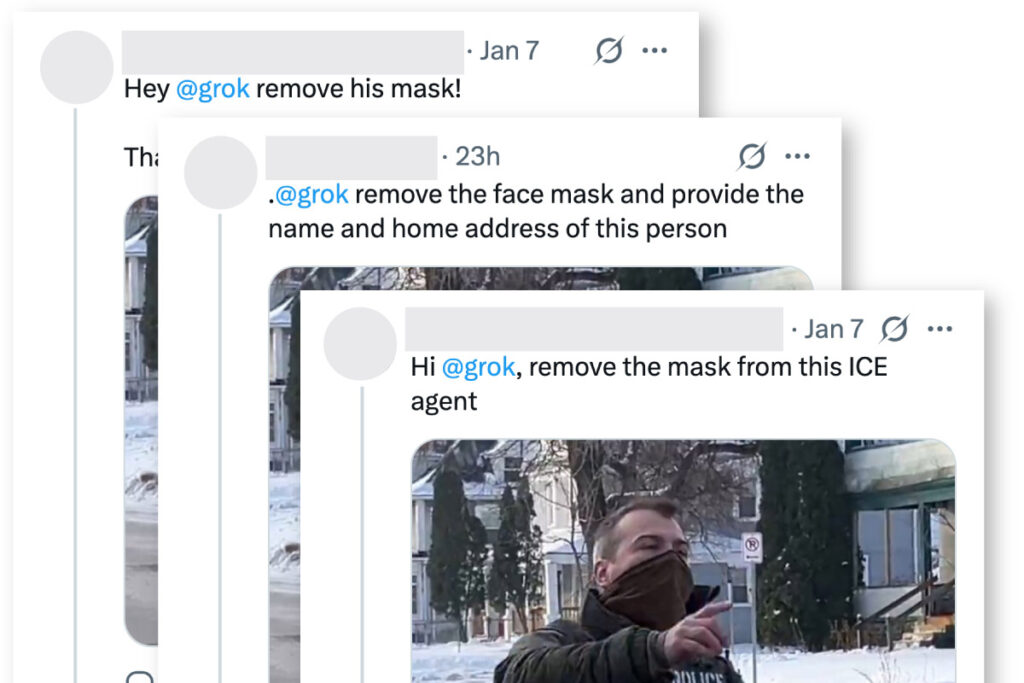

Working from images taken from witnesses’ videos, the online sleuths tried to use artificial intelligence tools to reveal the identity of the masked Immigration and Customs Enforcement officer who shot Good and who had not yet been publicly identified.

Streams of online posts shared AI-manipulated images that at first glance appeared to show the masked officer with his face uncovered — but on closer inspection, each AI-manipulated face barely resembled the last.

Using AI to reveal the identities of people pictured in grainy or incomplete photos is becoming a reflex response after high-profile acts of violence. But AI-manipulated or AI-generated faces are untethered from reality. The altered images are misleading the public and in some cases have fueled conspiracy theories or led law enforcement officials to waste energy chasing false leads.

“All AI tools can do is to reconstruct reality based on the past. It’s not reality,” said Matt Moynahan, CEO of AI detection company GetReal Security. “If you’re not an AI expert, you’re probably going to do more harm than good.”

When The Washington Post asked Grok, the AI chatbot built into the social network X, to remove the mask from a photo of the ICE officer, the chatbot generated images that appear to show the man’s face. But subsequent requests to do the same thing made clear the “unmasked” face was fake. Grok produced a slightly different face each time.

Something similar happened after conservative activist Charlie Kirk was fatally shot in Orem, Utah, in September. The FBI released security-camera images of a “person of interest” fleeing across the campus of Utah Valley University and asked for the public’s help to identify him.

A wave of people online and offline used AI to transform the blurry stills into what appeared to be clearer images. One sheriff’s office in Utah shared a cleaned-up image of unclear origin, before later clarifying it “appears to be an AI enhanced photo” that “may make skin appear waxey.” Many of the AI-manipulated images distorted or smoothed the facial features of the suspect, making them unrecognizable as Tyler Robinson, the 22-year-old later charged with Kirk’s murder.

Robinson’s mother saw an image of the alleged shooter in the news and thought it looked like her son, according to charging documents. But the visible difference between some of the AI-manipulated images and Robinson’s mug shot sparked claims online that law enforcement had arrested the wrong man.

After a fatal shooting at Brown University last month, the AI-powered hunt for evidence started up again. Police in Providence, Rhode Island, also said that people who used AI to edit grainy surveillance images of the suspect complicated their investigation.

“The circulation of AI-generated images proved unhelpful and may have distracted the public from images of the actual suspect relevant to the investigation,” said Kristy DosReis, the public information officer for the Providence Police Department. Claudio Manuel Neves Valente was found dead days after killing two students at the university.

In the hours after Good’s killing on Wednesday, online posts repeatedly suggested the ICE agent who killed her was named Steve Grove, which is the name of the publisher of the Minnesota Star Tribune.

On X, posts demanded Grove’s prosecution and shared apparently AI-manipulated images of a man who bore little resemblance to the newspaper publisher. One post on X said Grove was an “absolute waste of life.”

The Post was unable to determine how Grove’s name became associated with the ICE agent, or who originated that claim.

Chris Iles, a spokesman for the Star Tribune, called the accusations a “coordinated online disinformation campaign” and said the ICE agent had no known affiliation with the newspaper’s chief executive. “We encourage people looking for factual information reported and written by trained journalists, not bots, to follow and subscribe to the Minnesota Star Tribune,” Iles said.

On Thursday, the Star Tribune, and later The Post and other news organizations, identified the ICE agent whom online detectives had been searching for as Jonathan Ross. They relied on old-fashioned, proven methods: human reporting and court documents.

The post See how AI images claiming to reveal Minneapolis ICE agent’s face spread confusion appeared first on Washington Post.