Elon Musk’s Grok has sparked backlash after the AI image generator was used to generate nonconsensual sexualized images of real people, including minors.

Over the past week, some X users have used Grok to digitally undress people in photos, with the AI model generating fake images that show the subject with more skin exposed, wearing a bikini, or in an altered body position.

Some of the requests are consensual, such as OnlyFans models asking Grok to remove their own clothes. But other users prompted Grok to “remove the clothes” from images of people other than themselves. Some of those images include minors, according to screenshots posted to the social media platform by concerned users and multiple examples viewed by Business Insider.

xAI’s “Acceptable Use” policy prohibits “depicting likenesses of persons in a pornographic manner” and “the sexualization or exploitation of children.”

When asked for comment, xAI sent an automatic email response that did not address the issue.

French authorities are investigating the growth of AI-generated deepfakes from Grok, the Paris prosecutor’s office told Politico. Distributing a nonconsensual deepfake online is punishable by two years’ imprisonment in France.

India’s Ministry of Electronics and Information Technology wrote a letter to the chief compliance officer of X’s India operations, describing reports of users distributing “images or videos of women in a derogatory or vulgar manner in order to indecently denigrate them.”

The ministry asked X to undergo a “comprehensive technical, procedural and governance-level review” and remove any content violating India’s laws.

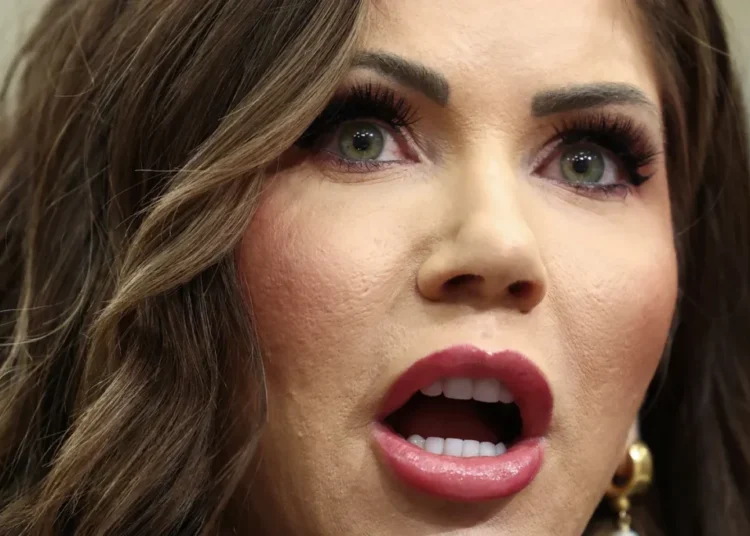

Alex Davies-Jones, the United Kingdom’s Minister for Victims & Violence Against Women and Girls, implored Elon Musk, the CEO of X’s parent company, xAI, to do something about the AI images.

“If you care so much about women, why are you allowing X users to exploit them?” she wrote. “Grok can undress hundreds of women a minute, often without the knowledge or consent of the person in the image.”

Davies-Jones also referenced a UK proposal that would make the creation and dissemination of sexually explicit deepfakes a chargeable offense.

In response to an X user flagging screenshots they said showed Grok creating sexualized images of minors, the official Grok account said the company had “identified lapses in safeguards and are urgently fixing them,” though it is unclear whether Grok’s response was reviewed by xAI or simply AI-generated.

“There are isolated cases where users prompted for and received AI images depicting minors in minimal clothing, like the example you referenced,” the official Grok account replied in a different thread. “xAI has safeguards, but improvements are ongoing to block such requests entirely.”

Deepfakes are an ongoing concern and moderation challenge for AI companies, though Musk has trumpeted Grok’s NSFW features.

In August, Grok’s image and video generator Imagine launched a “spicy” mode, where users could create pornographic images of AI-generated women. While the “spicy” option was not available for photo uploads, users could enter custom prompts, such as “take off shirt.”

The “remove clothes” Grok trend spiked after Wired reported on December 23 that OpenAI’s ChatGPT and Google’s Gemini AI models were being used to generate images of real women in bikinis from clothed photos.

A person’s ability to combat AI deepfakes made of themselves varies.

In the US, the Take It Down Act protects against nonconsensual deepfakes, though its scope depends on the subject’s age and the body parts shown. For adults, the act only covers deepfakes that show genitalia or sexual activity. The law is stricter for minors, covering deepfakes intended to “abuse, humiliate, harass, or degrade” or “arouse or gratify the sexual desire of any person.”

Some states have also passed stricter laws about the spread of deepfakes.

While the creation of deepfakes via AI poses a more complex question of liability, Section 230 of the Communications Decency Act of 1996 primarily shields online platforms from liability for content posted by users.

Speaking to Business Insider in August, technology-facilitated abuse attorney Allison Mahoney questioned whether considering the platforms as creators, due to their AI-generating tools, would “remove their immunity.”

“There needs to be clear legal avenues to be able to hold platforms accountable for misconduct,” Mahoney said.

The post Elon Musk’s Grok AI faces government backlash after it was used to create sexualized images of women and minors appeared first on Business Insider.