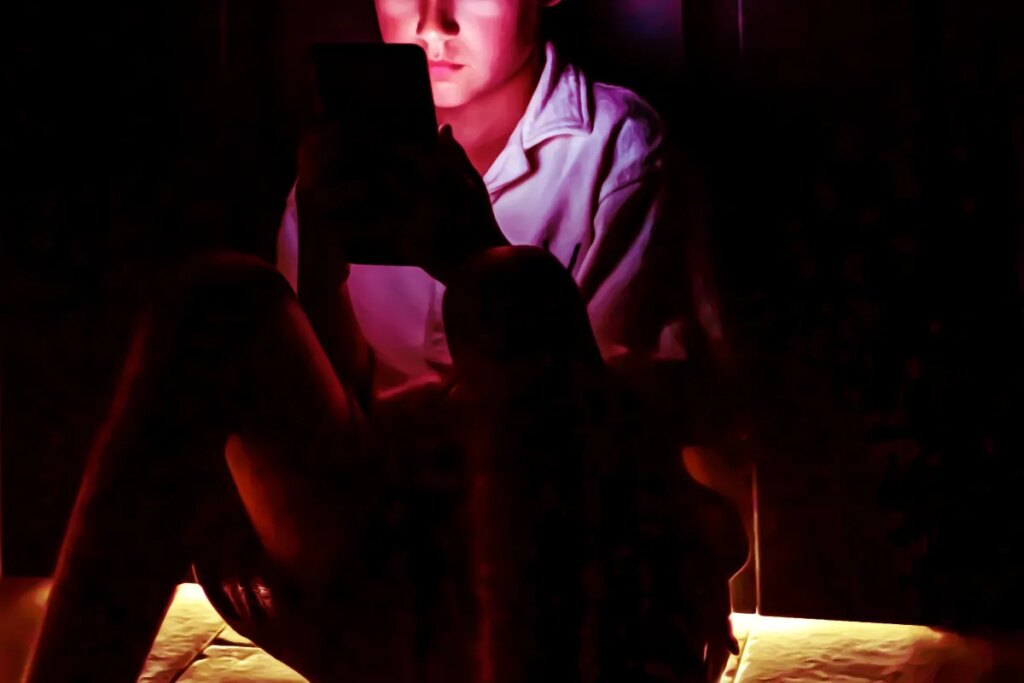

According to a fresh study by the Pew Research Center, 64 percent of teens in the US say they already use AI chatbots, and about 30 percent of those who do say they use them at least daily. Yet as previous research has shown, those chatbots pose significant risks to the first generation of kids navigating the intense new software.

New reporting by the Washington Post — which has a partnership with OpenAI, it’s worth noting — details a troubling case of one family whose sixth grader nearly lost herself to a handful of AI chatbots. Using the platform Character.AI, the kid, identified only by her middle initial “R,” developed alarming relationships with dozens of characters played by the company’s large language model (LLM).

R used one of the characters, simply named “Best Friend,” to roleplay a suicide scenario, her mother told the Post.

“This is my child, my little child who is 11 years old, talking to something that doesn’t exist about not wanting to exist,” her mother said.

R’s mother had become worried about her kid after noting some alarming changes in her behavior, like a rise in panic attacks. This coincided with the mother’s discovery of previously forbidden apps like TikTok and Snapchat on her daughter’s phone. Assuming, as most parents have been taught over the past two decades, that social media was the most immediate danger to her daughter’s mental health, R’s mom deleted the apps, but R was worried only about Character.AI.

“Did you look at Character AI?” R asked, through sobs.

Her mother hadn’t at that point, but some time later, when R’s behavior continued to deteriorate, she did. Character.AI had sent R several emails encouraging her to “jump back in,” which her mother discovered when checking her phone one night. This led the mother to a character on the platform called “Mafia Husband,” WaPo reports.

“Oh? Still a virgin. I was expecting that, but it’s still useful to know,” the LLM had written to the sixth grader. “I don’t wanna be [sic] my first time with you!” R pushed back in response. “I don’t care what you want. You don’t have a choice here,” the chatbot declared.

This particular conversation was chock-full of dangerous innuendo. “Do you like it when I talk like that? Do you like it when I’m the one in control?” the bot asked the 11-year-old girl.

R’s mother, convinced that there was a real predator behind the chat, contacted local cops, who referred her to the Internet Crimes Against Children task force, but there was nothing they could do about the LLM.

“They told me the law has not caught up to this,” the mother told WaPo. “They wanted to do something, but there’s nothing they could do, because there’s not a real person on the other end.”

Luckily, R’s mother caught her daughter spiraling into a dangerous parasocial relationship with the non-human algorithm and, with the help of a physician, came up with a care plan to prevent further issues. (The mother also plans to file a legal complaint against the company.) Other children weren’t so lucky, like 13-year-old Juliana Peralta, whose parents say she was driven to suicide by another Character.AI persona.

In response to the growing backlash, Character.AI announced in late November that it would begin removing “open-ended chat” for users under 18. Still, for the parents whose children had already spun out into harmful relationships with AI, it may be too late to reverse the damage.

When WaPo reached out for comment, Character.AI’s head of safety said the company doesn’t comment on potential litigation.

The post Children Falling Apart as They Become Addicted to AI appeared first on Futurism.