Think AI makes you smarter?

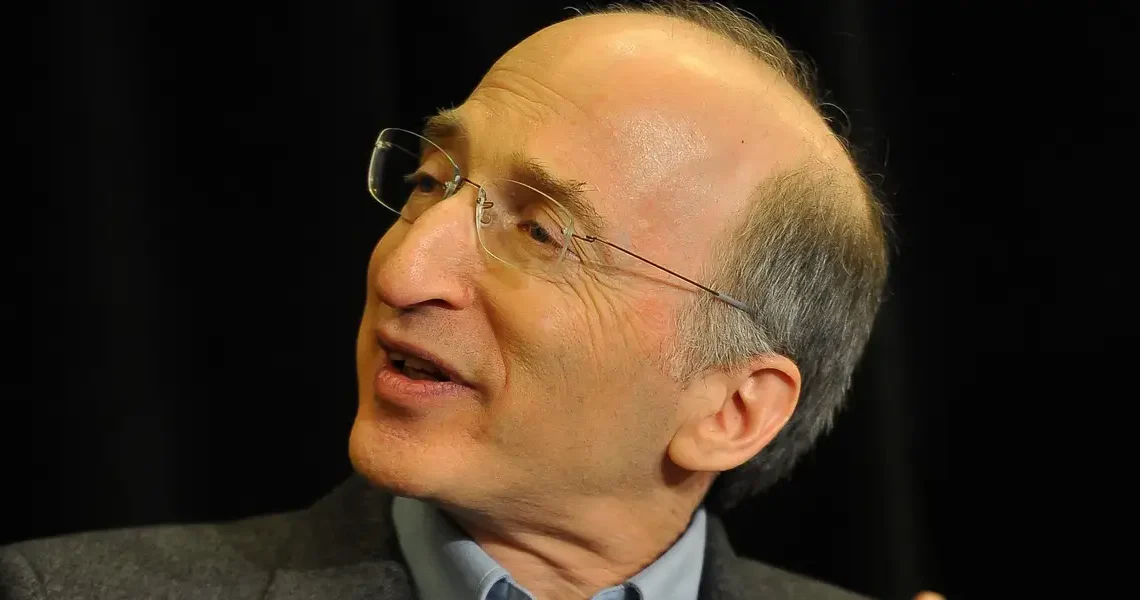

Probably not, according to Saul Perlmutter, a Nobel Prize-winning physicist credited with discovering that the universe’s expansion is accelerating.

He said AI’s biggest danger is psychological: it can give people the illusion they understand something when they don’t, weakening judgment just as the technology becomes more embedded in our daily work and learning.

“The tricky thing about AI is that it can give the impression that you’ve actually learned the basics before you really have,” Perlmutter said on a podcast episode with Nicolai Tangen, CEO of Norges Bank Investment Management, on Wednesday.

“There’s a little danger that students may find themselves just relying on it a little bit too soon before they know how to do the intellectual work themselves,” he added.

Rather than rejecting AI outright, Perlmutter said the answer is to treat it as a tool — one that supports thinking instead of doing it for you.

Use AI as a tool — not a substitute

Perlmutter said that AI can be powerful — but only if users already know how to think critically.

“The positive is that when you know all these different tools and approaches to how to think about a problem, AI can often help you find the bit of information that you need,” he said.

At UC Berkeley, where Perlmutter teaches, he and his colleagues developed a critical-thinking course centered on scientific reasoning, including probabilistic thinking, error-checking, skepticism, and structured disagreement. The course is taught through games, exercises, and discussion designed to make those habits automatic in everyday decisions.

“I’m asking the students to think very hard about how would you use AI to make it easier to actually operationalize this concept — to really use it in your day-to-day life,” he said.

The confidence problem

One of Perlmutter’s concerns is that AI often speaks with far more certainty than is warranted. He said it can be “overly confident” in what it says.

The challenge, Perlmutter said, is that AI’s confident tone can short-circuit skepticism, making people more likely to accept its answers at face value rather than question whether they’re correct.

That confidence, he said, mirrors one of the most dangerous human cognitive biases: trusting information that appears authoritative or confirms our existing beliefs.

To counter that instinct, Perlmutter said, people should evaluate AI outputs the same way they would any human claim: by weighing credibility, uncertainty, and the possibility of error.

Learning to catch when you’re being fooled

In science, Perlmutter said, researchers assume they are making mistakes and build systems to catch them. For example, scientists hide their results from themselves, he said, until they’ve exhaustively checked for errors, thereby reducing confirmation bias.

The same mindset applies to AI, he added.

“Many of [these concepts] are just tools for thinking about where are we getting fooled,” he said. “We can be fooling ourselves, the AI could be fooling itself, and then could fool us.”

That’s why AI literacy also involves knowing when not to trust the output, he said — and being comfortable with uncertainty, rather than treating AI outputs as absolute truth.

Still, Perlmutter is clear that this isn’t a problem with a permanent solution.

“AI will be changing,” he said, “and we’ll have to keep asking ourselves: is it helping us, or are we getting fooled more often? Are we letting ourselves get fooled?”

The post A Nobel Prize-winning physicist explains how to use AI without letting it do your thinking for you appeared first on Business Insider.