On December 11, OpenAI released ChatGPT 5.2, the latest version of the widely used AI chatbot.

As it does every time it releases a minor update, the company hailed its latest version as a “significant improvement in general intelligence,” calling it the “best model yet for real-world, professional use.” In a further display of hubris, OpenAI even went so far as to claim 5.2 is their “first model that performs at or above a human expert level.”

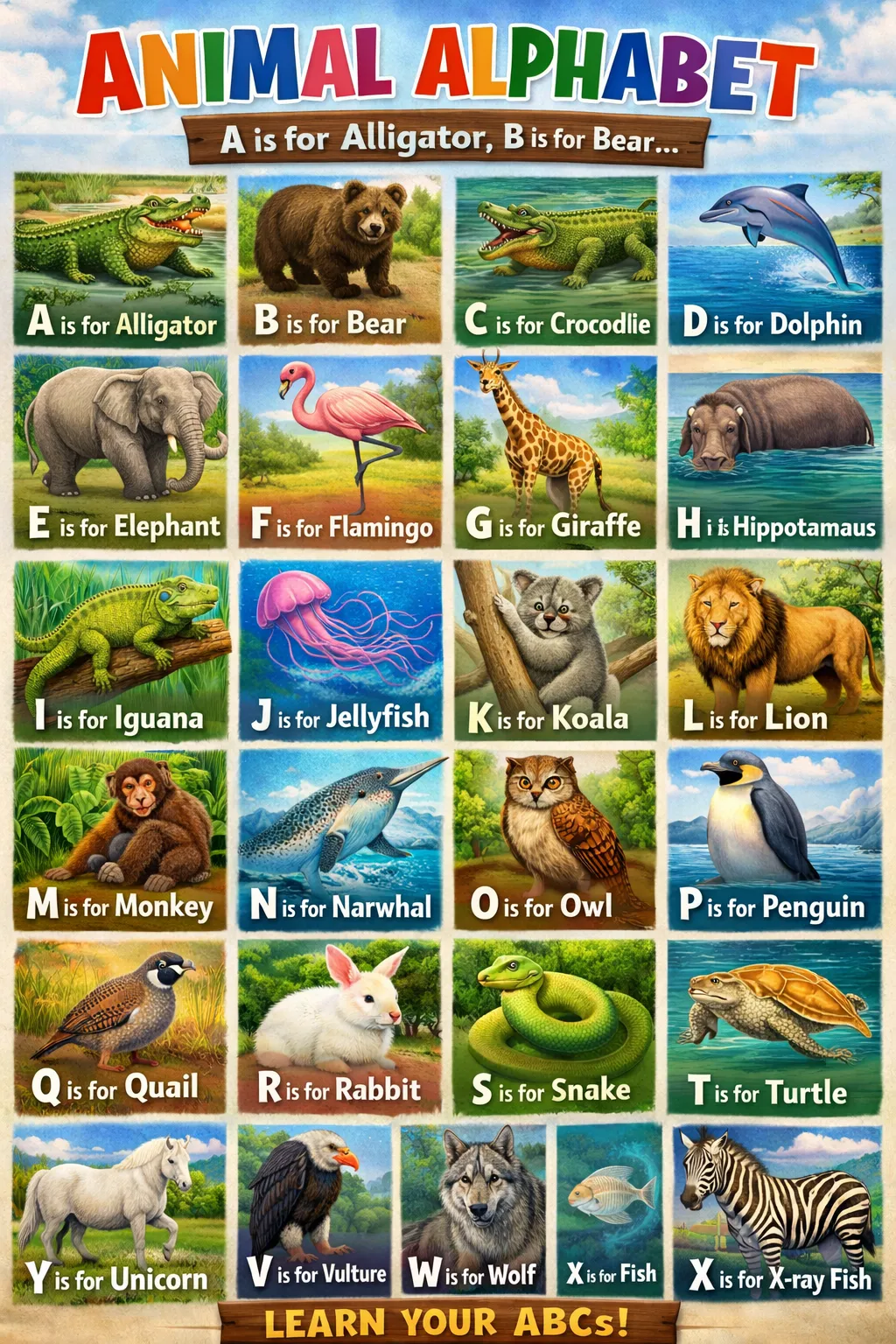

Yet when we ran it through an incredibly simple prompt — to generate an alphabetized chart of animals for school children — the world-beating AI model came up laughably short.

The ABC deficiency was first noticed by BCA Research chief global strategist Peter Berezin. Using ChatGPT 5.1, which was released back in November, Berezin asked the AI to “draw [a] poster where you say A is for an animal that starts with the letter A, B for an animal that starts with B, all the way to Z.”

That version thought for six seconds and spat out an image with 25 letters, one short of the 26 in the standard English alphabet. Already off to a rocky start, 5.1 does an okay job with “A” through “I,” but goes off the rails as soon as it gets to “K,” which it says “is for Lion.”

It goes on like this: “O is for jellyfish,” while “Q is for penguin” and “R is for snake.” By the time it gets to the end, “Z” is for “urba” — a turtle, apparently — followed by “B,” along with a picture of a pig.

“Still needs more capex,” Berezin quips, referencing the $1.15 trillion OpenAI has committed to spending on hardware in 2025 alone.

Still needs more capex pic.twitter.com/a6YRYk7S24

— Peter Berezin (@PeterBerezinBCA) December 15, 2025

We were curious how ChatGPT 5.2 stacked up to its month-old predecessor — and sure enough, it didn’t disappoint.

Though the latest version of ChatGPT did a bit better with individual animals, it still included only 24 letters of the English alphabet, forgetting “U” and “Z.” Instead, 5.2 listed “Y” for “Yak” right after “T.” This particular alphabet ends with “X,” which of course stands for “X-ray fish,” but is illustrated by a zebra.

Its illustrations were also a bit suspect, with kangaroos sporting a weird limb, an iguana with two tails, a narwhal with a strange mash of eyes and fins as well as a bird’s beak, and a hedgehog with a cat’s face, to name a few weak points.

A follow-up prompt only spread the spill around. This time, there are 25 letters total. “Y” is still a problem area, taking the place of “U,” only this time it stands for “Unicorn,” which isn’t a real animal in the first place. By the end, there are two entries for “X,” one of which “is for fish,” followed by another “X,” again for “X-ray fish,” but with the same zebra illustration.

The second poster also starts to scramble the instructions, injecting bits of the prompt into the poster title: “A is for alligator, B is for bear…”

As some users on X-formerly-Twitter pointed out, results are the same for Google’s Gemini, and as a quick glance at Grok shows, Elon Musk’s AI engine isn’t even close. But while ChatGPT might be the best at making an animal poster, it still comes up laughably short, and it is certainly not at the level of any “human expert” we’ve ever met.


The post When Asked to Generate an Alphabet Poster for Preschoolers, the Latest Version of ChatGPT Just Flails Wildly appeared first on Futurism.