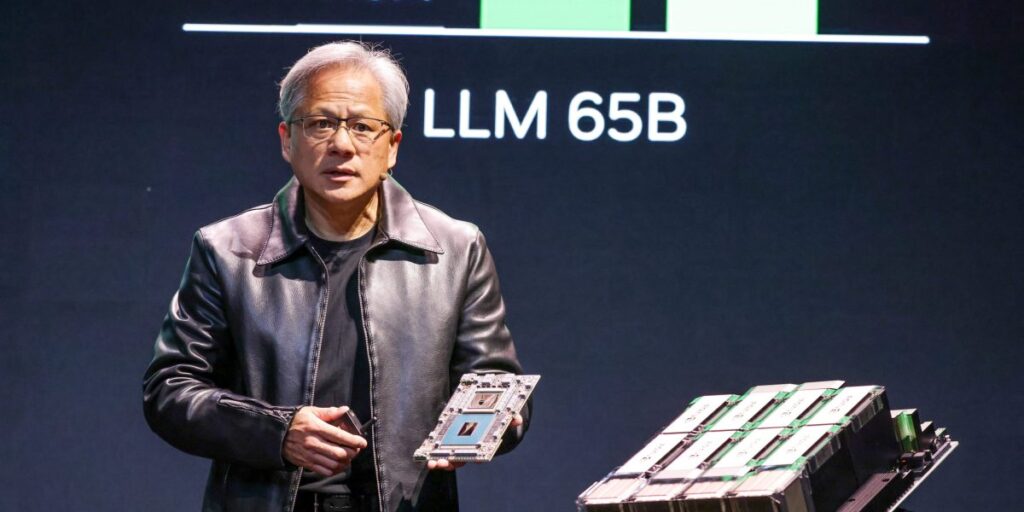

New AI chips seem to be hitting the market at an ever-faster pace as tech companies scramble to gain supremacy in the global arms race for computing power.

But that raises the question: What happens to all those older-generation chips?

The AI stock boom has lost much of its momentum in recent weeks, due in part to worries that so-called hyperscalers aren't correctly accounting for the depreciation of the hoard of chips they've purchased to power chatbots.

Michael Burry, the investor of Big Short fame who famously predicted the 2008 housing collapse, sounded the alarm last month when he warned that AI-era profits are built on "one of the most common frauds in the modern era," namely stretching the depreciation schedule. Spreading an asset's cost over more years than it will actually be useful lowers the annual depreciation expense and so inflates reported earnings. Burry estimated Big Tech will understate depreciation by $176 billion between 2026 and 2028.

But according to a note last week from Alpine Macro, chip depreciation fears are overstated for three reasons.

First, analysts pointed out that the software advances accompanying next-generation chips can also upgrade older-generation processors. For example, software improvements can boost the performance of Nvidia's five-year-old A100 chip two- to threefold compared with its performance at launch.

Second, Alpine said the need for older chips remains strong amid rising demand for inference, the process of a trained model responding to queries, as when a chatbot answers a user. In fact, Alpine expects inference demand to significantly outpace demand for AI training in the coming years.

"For inference, the latest hardware helps but is often not essential, so chip quantity can substitute for cutting-edge quality," analysts wrote, adding that Google is still running seven- to eight-year-old TPUs at full utilization.

Third, China continues to demonstrate "insatiable" demand for AI chips, as its supply "lags the U.S. by several generations in quality and severalfold in quantity." And even though Beijing has banned some U.S. chips, Alpine expects the black market to keep filling China's shortfalls.

Meanwhile, not all chips used for AI belong to hyperscalers. Even the graphics processors inside everyday gaming consoles could be put to work.

A note last week from Yardeni Research pointed to "distributed AI," which links otherwise idle chips in homes, crypto-mining servers, offices, universities, and data centers into global virtual networks.

While distributed AI can be slower than a cluster of chips housed in a single data center, its network architecture can be more resilient when one computer or a group of computers fails, Yardeni added.

“Though we are unable to ascertain how many GPUs were being linked in this manner, Distributed AI is certainly an interesting area worth watching, particularly given that billions are being spent to build new, large data centers,” the note said.

The post What happens to old AI chips? They’re still put to good use and don’t depreciate that fast, analyst says appeared first on Fortune.