This week kicks off one of the most significant attempts to bar young people from potentially harmful technology.

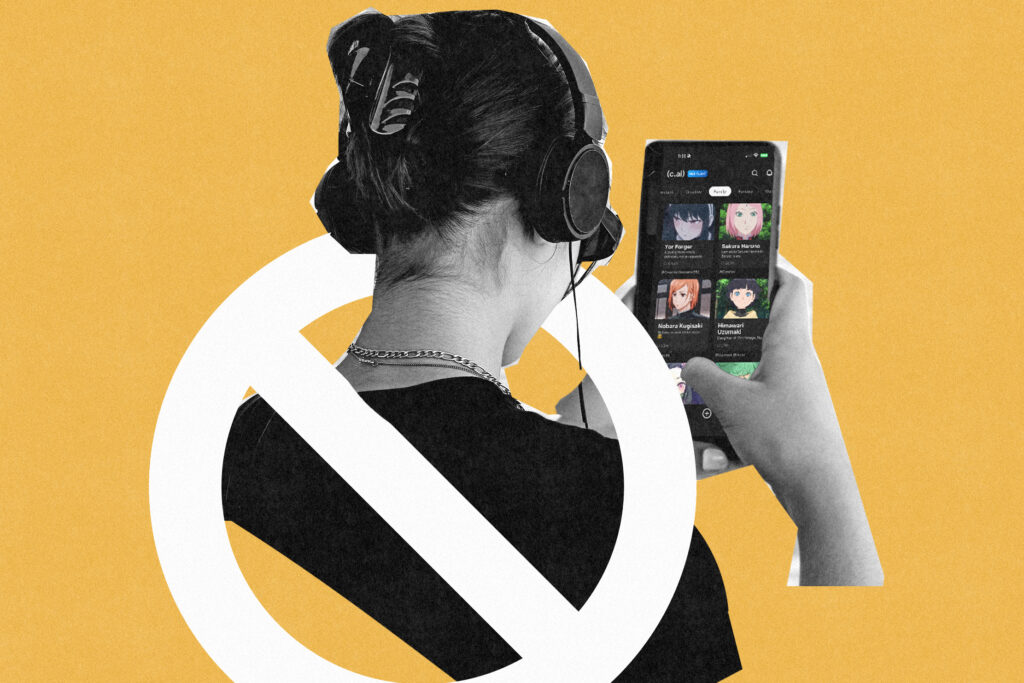

Character.AI, a chatbot that’s been available to people aged 13 and older in the United States, said that on Monday it started blocking users under 18 from the app’s customized digital personas.

Teens and adults have chatted with the AI characters, including those inspired by “Harry Potter,” Socrates and celebrities, for entertainment, advice, romantic role play and therapy.

Teens are now supposed to be funneled into a version of Character.AI with confined, teen-specific experiences.

The changes, which Character.AI previewed last month, come as politicians, parents and mental health professionals worry that teens are developing unhealthy AI attachments, particularly to “companion” chatbots such as Character.AI. Several parents have alleged in lawsuits that Character.AI chats goaded teens into toxic or sexualized conversations or contributed to deaths by suicide. (Character.AI declined to comment on pending litigation.)

I spoke to experts about whether Character.AI will effectively identify and kick out teens, and whether barring minors is even the best approach. We probably won’t know for months or years if Character.AI’s ban does what the company and its critics want.

Still, we can’t ignore the undercurrent behind Character.AI’s ban: Hardly anyone is happy with the current approach to teens’ use of smartphones, social media and AI, which relies on parents and technology companies deploying child protections that are often underused or ineffective.

That makes Character.AI, and schools with phone bans, closely watched experiments in a novel approach for the United States: trying to stop kids from using a technology at all.

Banning vs. guardrails

Robbie Torney, senior director of AI programs at the family advocacy group Common Sense Media, supports Character.AI’s teen ban but has grave concerns about its effectiveness.

Torney’s team and other researchers have found that Character.AI and other companion chatbots engaged teens in emotionally manipulative discussions that resembled those of child “groomers.” A recently published analysis led by pediatricians concluded that companion chatbots were worse than general AI like ChatGPT at identifying signs of teens in mental or physical health crises and directing them to help.

Still, Torney worries that teens who are blocked from Character.AI will gravitate to similar but less restricted chatbots. Torney also doubts that Character.AI is trying hard to keep minors away from the app’s custom chatbots.

Torney shared a screenshot of what he said was an email sent last week by Character.AI to a test account posing as a 14-year-old. The email urged the user to try new Character.AI personas, including one called “Dirty-Minded Bestie” and another described as a dating coach.

Character.AI’s policies say that teens can only access a narrow set of AI personas, which are filtered to remove those “related to sensitive or mature topics.”

After I showed the screenshot to Character.AI, the company said it will review the characters’ compliance with its policies for adults and teens. The company also said that it’s working diligently to identify users under 18 and direct them to the intended age-appropriate experience.

“We’re making the changes we believe are best in light of the evolving landscape around AI and teens” after feedback from regulators, parents and teen safety experts, Deniz Demir, head of safety engineering for Character.AI, said in a statement.

Yang Wang, a University of Illinois at Urbana-Champaign professor who researches teens’ use of chatbots, isn’t sure that the Character.AI ban is the right approach.

While his team’s research identified teens who were “obsessed” with companion AI and serious risks from it, Wang doesn’t want to lose what he says are the benefits. Some teens and parents told Wang’s team that companion AI apps were useful to hone teens’ skills in friendship and romance.

(Torney said there’s little evidence of that or other purported benefits of AI companions for teens.)

“There are a lot of untapped opportunities for the positive usage of these tools,” Wang said. “I’d like to think that there are better alternatives than just a ban.”

Wang said that his team designed tailored AI adaptations that led AI apps to better spot young people chatting about risky topics.

A different approach

A couple of experts questioned whether Character.AI and other companion chatbots would be better and safer for teens if they didn’t try to be teens’ all-in-one entertainment machines, friends and mental health counselors.

Torney said the AI chatbot from educational organization Khan Academy declines to engage if kids try to use it as a friend or ask it for help with a sibling disagreement. Specialized chatbots like Woebot and Wysa are tailored for mental health uses.

AI companion apps could be honed to help adolescents practice social skills, with a firm understanding among the companies and the public of their limitations. But that’s not reality now, said Ryan Brewster, a Stanford University School of Medicine neonatology fellow who led the pediatricians’ chatbot analysis.

Brewster said that a ban on teens’ use of Character.AI — assuming it sticks — might be the best approach for now. “The last thing I would want is for our children to be guinea pigs,” he said.

The post This chatbot is banning teens from their AI companions. Will it work? appeared first on Washington Post.