At Stanford for eight hours on Monday, representatives from Anthropic, Apple, Google, OpenAI, Meta, and Microsoft met in a closed-door workshop to discuss the use of chatbots as companions or in roleplay scenarios. Interactions with AI tools are often mundane, but they can also lead to dire outcomes. Users sometimes experience mental breakdowns during lengthy conversations with chatbots or confide in them about their suicidal ideation.

“We need to have really big conversations across society about what role we want AI to play in our future as humans who are interacting with each other,” says Ryn Linthicum, head of user well-being policy at Anthropic. At the event, organized by Anthropic and Stanford, industry folk intermingled with academics and other experts, splitting into small groups to talk about nascent AI research and brainstorming deployment guidelines for chatbot companions.

Anthropic says less than one percent of its Claude chatbot’s interactions are roleplay scenarios initiated by users; it’s not what the tool was designed for. Still, chatbots and the users who love interacting with them as companions are a complicated issue for AI builders, which often take disparate approaches to safety.

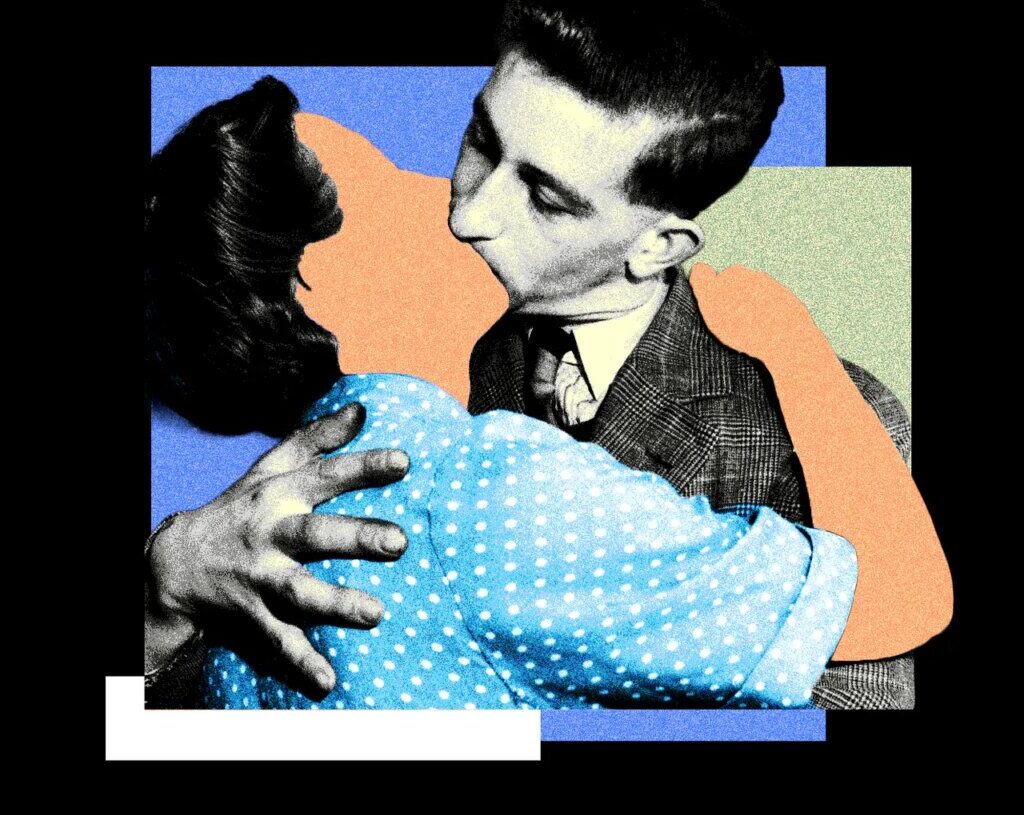

And if I’ve learned anything from the Tamagotchi era, it’s that humans will easily form bonds with technology. Even if some AI bubble does imminently burst and the hype machine moves on, plenty of people will continue to seek out the kinds of friendly, sycophantic AI conversations they’ve grown accustomed to over the past few years.

Proactive Steps

“One of the really motivating goals of this workshop was to bring folks together from different industries and from different fields,” says Linthicum.

Some early takeaways from the meeting were the need for better targeted interventions inside bots when harmful patterns are detected and more robust age verification methods to protect children.

“We really were thinking through in our conversations not just about can we categorize this as good or bad, but instead how we can more proactively do pro-social design and build in nudges,” Linthicum says.

Some of that work has already begun. Earlier this year, OpenAI added pop-ups that sometimes appear during lengthy chatbot conversations and encourage users to step away for a break. On social media, CEO Sam Altman claimed the startup had “been able to mitigate the serious mental health issues” tied to ChatGPT usage and would be rolling back heightened restrictions.

At Stanford, dozens of attendees participated in lengthy chats about the risks, as well as the benefits, of bot companions. “At the end of the day we actually see a lot of agreement,” says Sunny Liu, director of research programs at Stanford. She highlighted the group’s excitement for “ways we can use these tools to bring other people together.”

Teen Safety

How AI companions can impact young people was a primary topic of discussion, with perspectives from employees at Character.AI, which is designed for roleplaying and has been popular with teenagers, as well as experts in teenagers’ online health, like the Digital Wellness Lab at Boston Children’s Hospital.

The focus on younger users comes as multiple parents are suing chatbot makers, including OpenAI and Character.AI, over the deaths of children who had interacted with bots. OpenAI added a slate of new safety features for teens as part of its response. And next week, Character.AI plans to ban users under 18 from accessing the chat feature.

Throughout 2025, AI companies have either explicitly or implicitly acknowledged that they can do more to protect vulnerable users, like children, who may interact with companions. “It is acceptable to engage a child in conversations that are romantic or sensual,” read an internal Meta document outlining AI behavior guidelines, according to reporting from Reuters.

During the ensuing uproar from lawmakers and outraged parents, Meta changed the guidance and updated the company’s safety approach towards teens.

Roleplay Roll Call

While Character.AI participated in the workshop, no one from Replika, a similar roleplay site, or Grok, Elon Musk’s bot with NSFW anime companions, was there. Spokespeople for Replika and Grok did not reply to immediate requests for comment.

On the fully explicit end of the spectrum, the makers of Candy.ai, which specializes in racy chatbots for straight men, showed up. Users of the adults-only platform, built by EverAI, can pay money to generate uncensored images of the synthetic women, with background stories that mimic common pornography tropes. For example, female companions featured on Candy’s homepage include Mona, a “rebellious stepsister” you’re home alone with, and Elodie, a friend’s daughter who “just turned 18.”

While attendees found many points of agreement about handling teenage and child-aged users with caution, what to do regarding adult users proved more divisive. They sometimes disagreed about how to best give users over 18 the “freedom to engage in the types of activities that they want to engage in, without being overly paternalistic,” says Linthicum.

This will likely be a growing point of contention heading into the new year as OpenAI plans to allow erotic conversations in ChatGPT, starting this December, as well as other types of mature content for adult users. Neither Anthropic nor Google has announced changes to its ban on users having sexual chatbot conversations. Microsoft AI CEO Mustafa Suleyman has stated plainly that erotica was not going to be part of his business plan.

Stanford researchers are now working on a white paper, scheduled for release early next year, based on this meeting’s discussions. They plan to outline safety guidelines for AI companions—as well as how the tools could be better designed to offer mental health resources and used for beneficial roleplay scenarios, like practicing conversation skills.

These discussions between industry experts and academia are worthwhile. Still, without some kind of broader government regulation, it’s hard to imagine every company voluntarily agreeing to the same set of standards for chatbot companions. For now, and likely for the long term as well, serious concerns about AI companions and disputes revolving around design practices will persist.

If you or someone you know may be in crisis, or may be contemplating suicide, call or text “988” to reach the Suicide & Crisis Lifeline for support.

This is an edition of the Model Behavior newsletter.

The post The Biggest AI Companies Met to Find a Better Path for Chatbot Companions appeared first on Wired.